The evolution of graphics in video games has been remarkable. However, as graphical quality has advanced, so have gamers’ expectations. Today, players demand a level of visual fidelity that would have seemed unimaginable even a decade ago. Yet even today’s best-looking games achieve their realism through rendering shortcuts that produce highly convincing approximations of how we perceive the world. No matter how precise these approximations become, something often feels missing: a certain lack of realism.

That may be about to change: the gaming industry is on the brink of a transformative leap, and it comes in the form of Ray Tracing.

What is Ray Tracing?

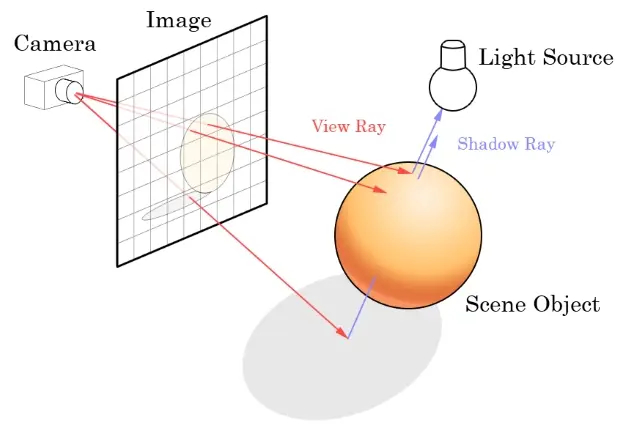

Ray tracing is a technique for rendering 3D scenes that closely mirrors the way humans perceive the world, because it replicates how light behaves in our surroundings. The method, which dates back to the early days of 3D rendering, meticulously calculates the paths of light rays from a source to their destination. It accounts for how these rays interact with objects in a scene: bouncing off them, passing through them, or being blocked by them. Its major advantage is that, given enough time and computing power, it can produce scenes that are virtually indistinguishable from reality.
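To make the idea concrete, here is a minimal sketch in Python, assuming a scene made only of spheres and a single point light. The names (`Sphere`, `intersect`, `shade`) are hypothetical and not taken from any engine or API. The sketch casts one ray, finds the nearest object it hits, and then fires a "shadow ray" toward the light to check whether another object blocks it. Like most practical ray tracers, it follows rays backward from the eye rather than forward from the light source, which avoids tracing rays that never reach the camera.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, k: float) -> Vec:
    return (a[0] * k, a[1] * k, a[2] * k)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


@dataclass
class Sphere:  # hypothetical scene primitive for this sketch
    center: Vec
    radius: float


def intersect(origin: Vec, direction: Vec, sphere: Sphere) -> Optional[float]:
    """Distance along a unit-length ray to the nearest hit in front of it, or None."""
    oc = sub(origin, sphere.center)
    b = dot(oc, direction)
    c = dot(oc, oc) - sphere.radius ** 2
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer root first
        if t > 1e-6:  # ignore hits behind the ray origin
            return t
    return None


def shade(origin: Vec, direction: Vec, spheres: List[Sphere], light: Vec) -> float:
    """Brightness in [0, 1] seen along one ray (simple diffuse lighting, no bounces)."""
    nearest_t, hit = None, None
    for s in spheres:
        t = intersect(origin, direction, s)
        if t is not None and (nearest_t is None or t < nearest_t):
            nearest_t, hit = t, s
    if hit is None:
        return 0.0  # ray escaped the scene: black background

    point = add(origin, scale(direction, nearest_t))
    normal = normalize(sub(point, hit.center))
    to_light = sub(light, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    to_light = scale(to_light, 1.0 / light_dist)

    # Shadow ray: nudge the start point off the surface to avoid self-hits.
    shadow_origin = add(point, scale(normal, 1e-4))
    for s in spheres:
        t = intersect(shadow_origin, to_light, s)
        if t is not None and t < light_dist:
            return 0.0  # another object obstructs the light

    # Lambertian (diffuse) term: surfaces facing the light are brighter.
    return max(0.0, dot(normal, to_light))
```

Calling `shade` once per pixel, with each ray direction pointing through that pixel, yields a simple grayscale image. Full ray tracers build on the same loop by spawning further rays for reflections and refractions, taking many samples per pixel, and using acceleration structures to avoid testing every ray against every object.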

How Does Ray Tracing Enhance Graphics in Games?

In the graphics arena, industry leaders AMD and Nvidia both unveiled their Ray Tracing advancements at GDC 2018. During the “State of Unreal” opening session, Epic Games, in collaboration with NVIDIA and ILMxLAB, gave the first public demonstration of real-time ray tracing in Unreal Engine. At the same event, AMD announced that it was working with Microsoft to help define, refine, and support the future of DirectX 12, including Ray Tracing.

Nvidia’s presentation showcased an experimental cinematic demo featuring Star Wars characters from The Force Awakens and The Last Jedi, built with Unreal Engine 4. The demo used NVIDIA’s RTX technology for Volta GPUs, accessed through Microsoft’s DirectX Raytracing API (DXR). An iPad running ARKit served as a virtual camera, allowing the demo to zoom in on fine details in close-up shots.

Tony Tamasi, NVIDIA’s senior vice president of content and technology, said that real-time ray tracing has long been a goal of the graphics and visualization industry. He credited NVIDIA RTX technology, Volta GPUs, and Microsoft’s new DXR API with making it possible, declaring that the long-awaited era of real-time ray tracing had finally arrived.

Although AMD did not present a proof of concept, it has confirmed its collaboration with Microsoft. Both companies are expected to make Ray Tracing support, through the DXR API, available to developers later in the year.

With high-end graphical fidelity now in developers’ hands, anticipation is growing for upcoming games with more realistic graphics, including accurate lighting and sharper detail. Although it is demanding on GPUs, Ray Tracing promises to narrow the gap between reality and virtual reality.
