Google has unveiled the Gemma 3 series of open models, which it says deliver strong performance for their size. According to the company, Gemma 3 models can run on a single Nvidia H100 GPU while delivering performance comparable to much larger models. The lineup includes models with 1B, 4B, 12B, and 27B parameters, designed for local use on laptops and smartphones.
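
To put the single-GPU claim in perspective, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. It uses the nominal parameter counts from the lineup above and assumes an H100 with 80 GB of memory; the byte sizes per parameter are the usual values for each format, not figures from Google, and the estimate ignores activations and the KV cache.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Parameter counts are the nominal sizes named in the article; real
# checkpoints may differ slightly. Activations and KV cache are ignored.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}
GEMMA3_PARAMS = {"1B": 1e9, "4B": 4e9, "12B": 12e9, "27B": 27e9}
H100_MEMORY_GB = 80.0  # assumption: the 80 GB H100 variant


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the approximate size of the weights in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpu(num_params: float, dtype: str, gpu_gb: float = H100_MEMORY_GB) -> bool:
    """True if the weights alone fit in the given GPU memory."""
    return weight_memory_gb(num_params, dtype) <= gpu_gb


if __name__ == "__main__":
    for name, params in GEMMA3_PARAMS.items():
        for dtype in BYTES_PER_PARAM:
            size = weight_memory_gb(params, dtype)
            print(f"Gemma 3 {name} @ {dtype}: ~{size:.1f} GB, fits: {fits_on_gpu(params, dtype)}")
```

By this estimate, the 27B model's weights take roughly 54 GB in bf16, which leaves some headroom on an 80 GB card, while lower-precision formats shrink the footprint further.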

With the exception of the smallest Gemma 3 1B model, all Gemma 3 models are inherently multimodal, capable of processing both images and videos. Additionally, they support over 140 languages, making them well suited to multilingual applications. Despite the models' compact size, Google has packed extensive knowledge into them.

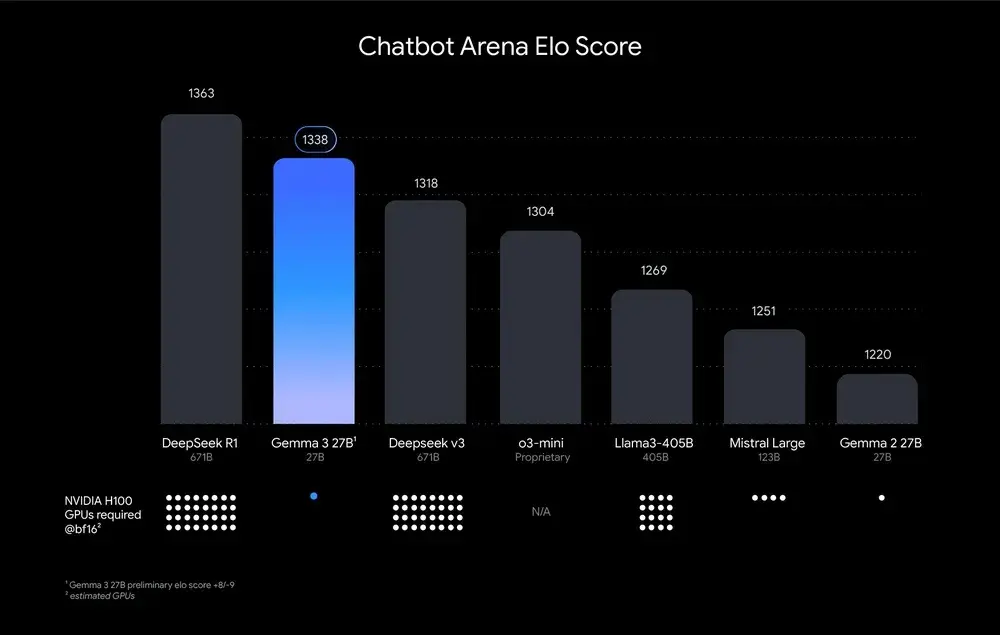

In terms of performance, the largest Gemma 3 27B model surpasses significantly larger models like DeepSeek V3 671B, Llama 3.1 405B, Mistral Large, and o3-mini in the LMSYS Chatbot Arena. It achieved an Elo score of 1,338, placing it just below the DeepSeek R1 reasoning model, which scored 1,363.
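
To give a sense of what a 25-point gap means, the sketch below applies the standard Elo expected-score formula to the two ratings quoted above. It assumes the conventional base-10, 400-point scale; the leaderboard's exact rating procedure may differ, so treat the result as an approximation.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expected score of A against B (base 10, 400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings as quoted in the article.
GEMMA_3_27B = 1338
DEEPSEEK_R1 = 1363

p_gemma = expected_score(GEMMA_3_27B, DEEPSEEK_R1)

if __name__ == "__main__":
    print(f"Expected preference rate for Gemma 3 27B vs DeepSeek R1: {p_gemma:.3f}")
```

Under that assumption, Gemma 3 27B would be expected to win about 46% of head-to-head comparisons against DeepSeek R1, which is close to an even split.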

It’s remarkable that such a compact model can compete with frontier models. Google attributes this success to “a novel post-training approach that enhances all capabilities, including math, coding, chat, instruction following, and multilingual support.”

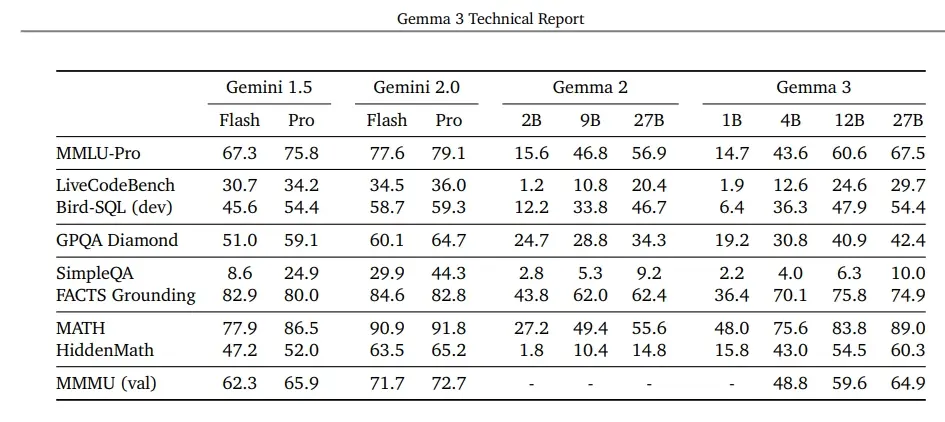

Additionally, Gemma 3 models are trained using an enhanced version of knowledge distillation, enabling the 27B model to nearly match the performance of Gemini 1.5 Flash.

Gemma 3 models feature a context window of up to 128K tokens and support function calling and structured output. With Gemma 3's compact size and strong performance, Google has introduced a highly competitive open model family to challenge DeepSeek R1 and Llama 3.1 405B. Developers will appreciate Gemma 3’s multimodal and multilingual capabilities, along with the flexibility of hosting open weights.
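
For readers unfamiliar with function calling, the following minimal sketch shows the general pattern on the application side: the model emits a structured JSON request, and the host program parses it and runs the matching function. The JSON shape, the `get_weather` tool, and the sample output string are all hypothetical and chosen for illustration; they are not Gemma 3's actual output format or API.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would query a weather service."""
    return f"Weather lookup requested for {city}"


# Registry of functions the application is willing to run for the model.
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(model_output: str) -> str:
    """Parse a JSON tool call emitted by a model and run the named tool.

    Assumes a hypothetical format: {"name": ..., "arguments": {...}}.
    """
    call = json.loads(model_output)
    name = call["name"]
    args = call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    return TOOLS[name](**args)


# Hypothetical structured output standing in for a model response.
example_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
result = dispatch_tool_call(example_output)

if __name__ == "__main__":
    print(result)
```

Keeping an explicit registry means the application only ever runs functions it has chosen to expose, regardless of what name the model produces.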