After months of anticipation, OpenAI finally launched ChatGPT’s Advanced Voice feature for paid subscribers, and I couldn’t wait to put it to the test. This new feature promises to deliver smooth, natural conversations with real-time interruptions just like Gemini Live, which, let’s be honest, didn’t exactly wow me when I tried it. But here’s the kicker: ChatGPT Advanced Voice comes with native audio input and output, so I was eager to see if it lives up to the hype.

Natural Conversations

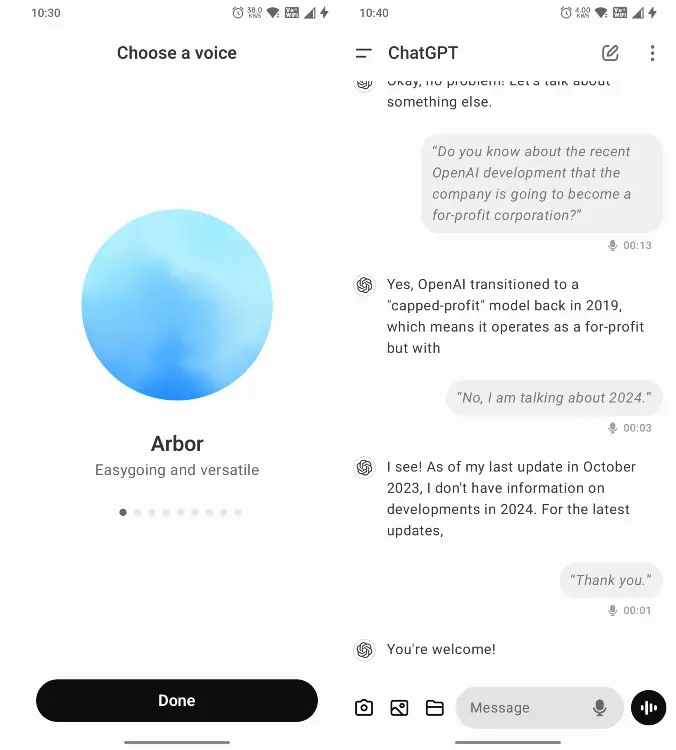

First impressions? ChatGPT Advanced Voice Mode offers an impressively smooth conversation flow. There are nine different voices you can choose from, each with a distinct, upbeat vibe. Two of my favorites, Arbor and Vale, come with charming British accents that add a touch of elegance (yes, I’m a sucker for British accents). Interestingly, OpenAI decided to retire the “Sky” voice, which some said was eerily similar to Scarlett Johansson in Her. Probably for the best! Here’s a quick rundown of the available voices:

- Arbor – Easygoing and versatile

- Breeze – Animated and earnest

- Cove – Composed and direct

- Ember – Confident and optimistic

- Juniper – Open and upbeat

- Maple – Cheerful and candid

- Sol – Savvy and relaxed

- Spruce – Calm and affirming

- Vale – Bright and inquisitive

Just as advertised, ChatGPT Advanced Voice mode allows interruptions during conversation. Start talking while it’s mid-sentence, and it’ll automatically pause. Gemini Live does something similar, but this felt more seamless.

I asked ChatGPT Advanced Voice about OpenAI’s recent decision to become a for-profit corporation, but it wasn’t aware of the development. With no internet access and a knowledge cutoff of October 2023, like GPT-4o, it couldn’t provide the latest details.

In contrast, Gemini Live stands out, as it can browse the web and retrieve up-to-date information on any topic. I also attempted to have a deep conversation about its existence and whether it experiences emotions, but ChatGPT Advanced Voice continually avoided the subject.

Here’s where ChatGPT Advanced Voice mode really shines: remembering context. Unlike Gemini Live, which tends to forget what we’ve discussed earlier, ChatGPT recalls details from the same conversation. If we talked about something earlier in the session, it quickly pulls that context back into the conversation, which saves me from constantly re-explaining myself. I found that super convenient.

Another cool feature is the ability to set custom instructions. You can provide details about who you are, where you live, or even how you prefer your responses. It personalizes the interaction, which makes conversations feel more tailored and meaningful. Overall, when it comes to two-way interaction, ChatGPT Advanced Voice is a solid contender. It’s not perfect, but for natural and engaging conversations, it’s definitely a step in the right direction.

Practice Interview

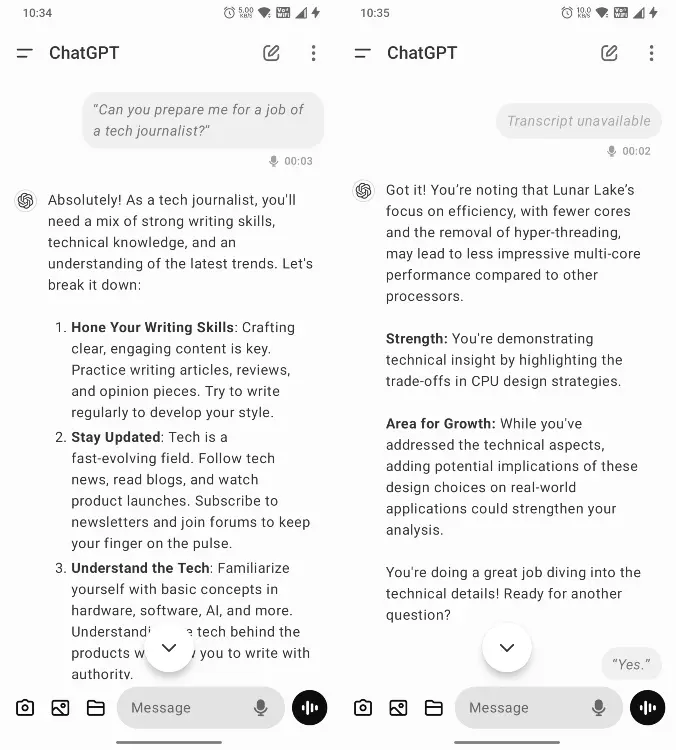

At the GPT-4o launch in May, OpenAI showcased how ChatGPT Advanced Voice mode excels at helping users prepare for interviews. While it doesn’t support cameras yet, you can still have ChatGPT Advanced Voice simulate an interviewer to help you get ready for your next job interview.

I asked ChatGPT Advanced Voice Mode to help me prepare for a job as a tech journalist, and it provided a list of essential skills I should know. It also asked me several tech-related questions, offering insights into my strengths and areas for improvement. As the conversation progressed, it felt like I was being interviewed by someone knowledgeable, keeping me engaged with challenging and thought-provoking questions.

Story Recitation

One of the standout features of ChatGPT Advanced Voice Mode is its ability to recite stories with dramatic flair, using different intonations. I asked it to narrate a story for my (fictional) child in a dramatic style, and it delivered. To make it even more entertaining, I instructed ChatGPT Advanced Voice to enhance the performance by adding whispers, laughter, and growls, making the story more engaging and fun.

I’ve tried similar features with Gemini Live, but ChatGPT Advanced Voice feels more expressive. It gasped in surprise, cheered with excitement, and seamlessly transitioned between characters. It’s genuinely impressive in the way it brings stories to life.

That said, there’s still room for improvement. While it’s fun and engaging, the experience isn’t quite as dramatic as what OpenAI showcased in its demos. There’s potential here for even more creativity and depth, and I’m excited to see where it goes from here!

Sing Me a Lullaby

When I first saw the demo of ChatGPT Advanced Voice Mode back in May, I was thrilled to see it could sing! Naturally, I couldn’t wait to give it a try, so I asked it to sing me a lullaby. To my surprise, it simply replied, “I can’t sing or hum.” It seems that OpenAI has scaled back the singing capabilities of ChatGPT Advanced Voice for reasons that remain a mystery.

I didn’t give up there. I also asked it to perform an opera or recite a rhyme. Again, I received a terse response: “I can’t produce music.” It appears that copyright issues are limiting its ability to sing. So, if you were hoping for a personalized lullaby to soothe your little one to sleep, it looks like that dream isn’t quite a reality yet.

Counting Numbers FAST

This next test was both fascinating and fun, really pushing the limits of the multimodal GPT-4o voice mode. I asked ChatGPT Advanced Voice to count from 1 to 50 at lightning speed, and it nailed it! Midway through, I encouraged it to go even faster, and it did. Then, I switched gears and asked it to slow down, and it followed my instructions perfectly.

In this experiment, Gemini Live didn’t quite measure up; it simply read the generated text through a basic text-to-speech engine. In contrast, ChatGPT Advanced Voice shines with its native audio input/output capabilities, truly impressing in this area.

Multilingual Conversation

When it came to multilingual conversations, ChatGPT Advanced Voice Mode performed reasonably well during my tests. I started chatting in English, switched to Hindi, and then to Bengali. While it managed to keep the conversation going, I did encounter a few bumps along the way—the transitions between languages weren’t as smooth as I’d hoped. Interestingly, when I tested Gemini Live, it effortlessly understood my queries in various languages, showcasing a more seamless experience.

Voice Impressions

Now, let’s talk about voice impressions. While ChatGPT Advanced Voice Mode can’t mimic the voices of famous figures like Morgan Freeman or David Attenborough, it excels at accents. I asked it to speak with a Chicago accent, and it nailed it! It also did a fantastic job with Scottish and Indian accents. Overall, when it comes to chatting in different regional styles, ChatGPT Advanced Voice holds up well.

Limitations of ChatGPT Advanced Voice

Despite being a step up from Gemini Live with its comprehensive multimodal experience, ChatGPT Advanced Voice has its limitations. After putting it through its paces, I found that it lacks personality. The conversations still feel somewhat robotic, and it often misses those delightful human-like expressions that make chatting engaging.

For instance, it doesn’t laugh when I share a funny story, and it struggles to detect the speaker’s mood. Plus, it can’t interpret animal sounds or other environmental noises, something OpenAI had showcased during the launch. I genuinely believe that users will soon experience a more robust multimodal interaction, but for now, we’re working with a version of ChatGPT Advanced Voice that feels a bit limited.