Open Interpreter is an exciting open-source project that combines capabilities similar to ChatGPT plugins and Code Interpreter into a versatile AI tool that runs on Windows, macOS, and Linux. With Open Interpreter, you can interact with your system at the operating-system level: access and manipulate files, folders, and programs, browse the internet, and much more, all from a user-friendly Terminal interface. To set it up on your local PC, follow the steps below together with the documentation provided by the project's developers. This project has the potential to make AI a powerful tool for a wide range of tasks on your computer.

Before you start using Open Interpreter, keep a few important things in mind:

1. API Key: To get the most out of Open Interpreter, you need an OpenAI API key with access to GPT-4. The GPT-3.5 model may not work as well and can produce errors when running code. The GPT-4 API is more expensive, but it can handle a wider range of tasks on your system.

2. Local Models: Avoid running models locally unless you are comfortable with the build process. The project may still have bugs, especially when using local models on Windows, and running larger models effectively requires powerful hardware.

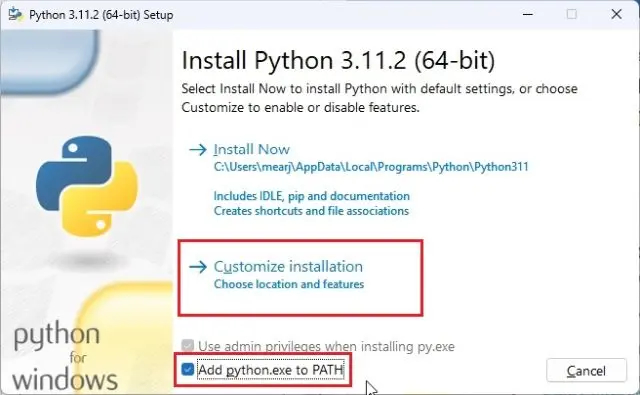

Setting Up the Python Environment

1. Install Python and Pip: Make sure you have Python and Pip installed on your computer.

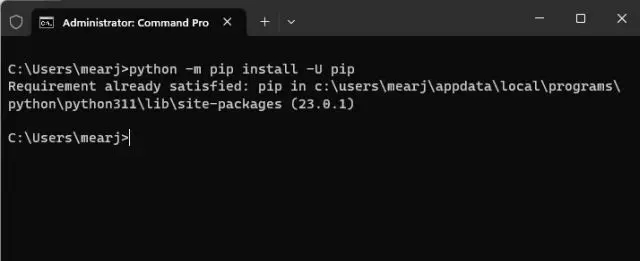

2. Update Pip: Open Terminal (macOS or Linux) or Command Prompt (CMD, on Windows) and run the following command to update Pip to the latest version:

python -m pip install -U pip

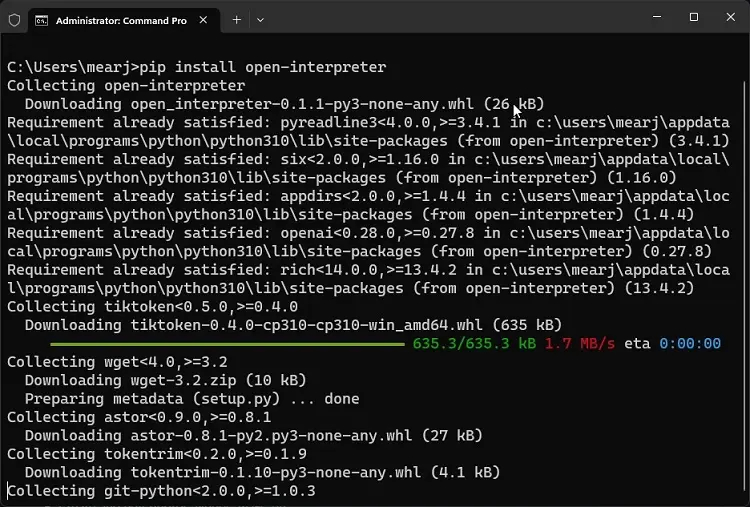

3. Install Open Interpreter: Run the following command in your Terminal or CMD to install Open Interpreter on your machine:

pip install open-interpreter
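To confirm the steps above worked, you can run a short check script. The sketch below reports the running Python version, whether pip is importable, and whether the `open-interpreter` package is installed. The minimum version `MIN_PYTHON = (3, 10)` is an assumption for illustration only; check the project's README for the version your release actually requires. The helper names are hypothetical.

```python
import importlib.metadata
import importlib.util
import sys
from typing import Optional

# ASSUMPTION: illustrative minimum version; check the Open Interpreter
# README for the version your release actually requires.
MIN_PYTHON = (3, 10)


def python_ok(version_info=sys.version_info, minimum=MIN_PYTHON) -> bool:
    """True if the (major, minor) version meets the minimum."""
    return tuple(version_info[:2]) >= tuple(minimum)


def pip_available() -> bool:
    """True if this Python can import pip (needed for `python -m pip`)."""
    return importlib.util.find_spec("pip") is not None


def installed_version(dist_name: str = "open-interpreter") -> Optional[str]:
    """Return the installed package version, or None if it is missing."""
    try:
        return importlib.metadata.version(dist_name)
    except importlib.metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    status = "OK" if python_ok() else f"(need {MIN_PYTHON[0]}.{MIN_PYTHON[1]}+)"
    print("Python", sys.version.split()[0], status)
    print("pip available:", pip_available())
    print("open-interpreter:", installed_version() or "not installed")
```

Save it as, for example, `check_env.py` and run `python check_env.py` with the same Python you used for the pip commands, so the results match that installation.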

Setting Up Open Interpreter on Your PC

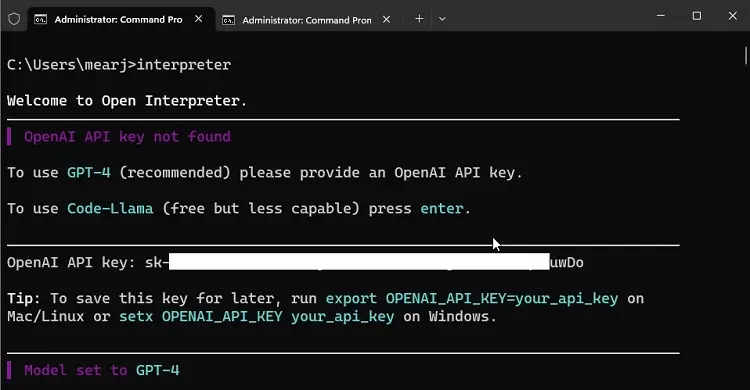

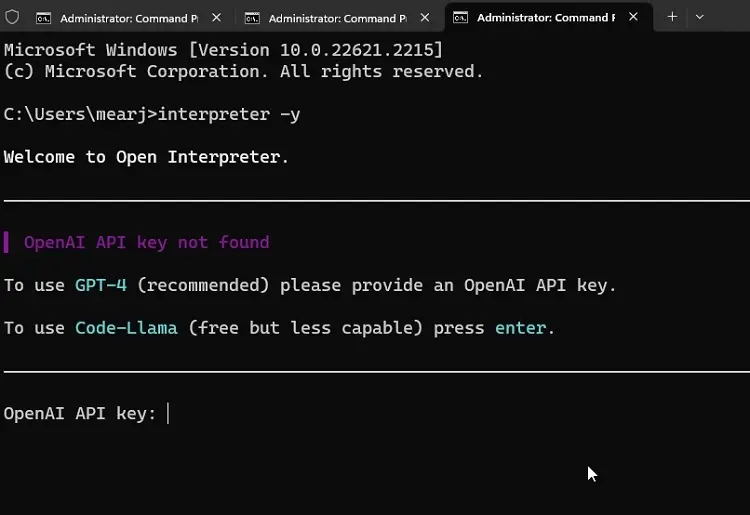

1. Select the Model: Open Interpreter supports several models, so choose the one that matches your API access and hardware:

- GPT-4 (the default), if your OpenAI account has access to the GPT-4 API:

interpreter

- GPT-3.5, which is cheaper and works with OpenAI accounts that lack GPT-4 API access:

interpreter --fast

- Code Llama, running locally. It is free to use but needs a capable computer with plenty of memory:

interpreter --local

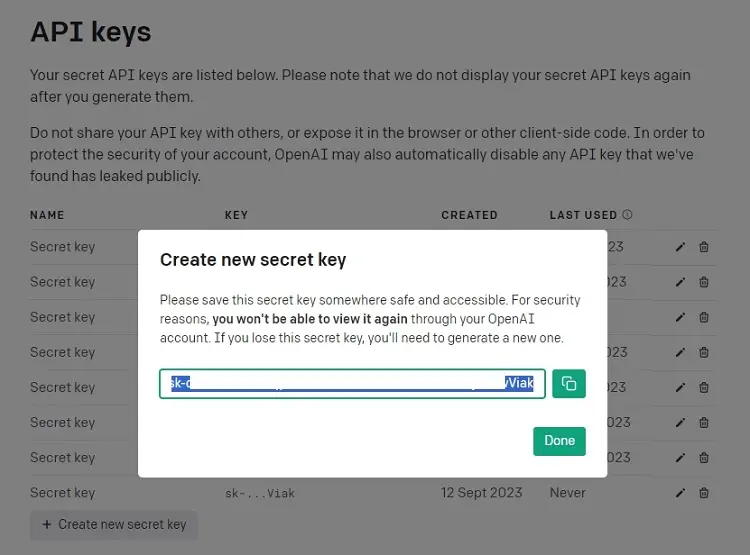

2. Get an API Key: If you're using GPT-4 or GPT-3.5, sign in to OpenAI's website, generate a new secret key, and copy it right away, since OpenAI shows the full key only once.

3. Paste the API Key: When Open Interpreter prompts you in Terminal or CMD, paste the API key and press Enter to proceed. You can exit Open Interpreter at any time by pressing “Ctrl + C.”
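If you launch Open Interpreter from scripts, the model choice described in these steps can be wrapped in a small helper. The Python sketch below is a hypothetical launcher, not part of Open Interpreter: `MODEL_FLAGS`, `build_command`, and `launch` are illustrative names, and only the `--fast` and `--local` flags come from this guide. Flags can differ between Open Interpreter releases, so run `interpreter --help` to see the ones your version supports.

```python
import shutil
import subprocess

# Flags from this guide; newer Open Interpreter releases may use different ones.
MODEL_FLAGS = {
    "gpt-4": [],            # default model, needs GPT-4 API access
    "gpt-3.5": ["--fast"],  # cheaper OpenAI model
    "local": ["--local"],   # Code Llama on your own hardware
}


def build_command(choice: str) -> list[str]:
    """Return the argument list for the chosen model (hypothetical helper)."""
    if choice not in MODEL_FLAGS:
        raise ValueError(f"unknown model {choice!r}; pick one of {sorted(MODEL_FLAGS)}")
    return ["interpreter", *MODEL_FLAGS[choice]]


def launch(choice: str) -> int:
    """Start Open Interpreter with the chosen model and return its exit code."""
    cmd = build_command(choice)
    exe = shutil.which(cmd[0])  # resolve the installed `interpreter` command
    if exe is None:
        print("`interpreter` not found on PATH; run `pip install open-interpreter` first.")
        return 1
    cmd[0] = exe
    try:
        return subprocess.run(cmd).returncode
    except KeyboardInterrupt:
        # Ctrl + C ends the session; 130 is the usual shell code for SIGINT.
        return 130
```

For example, `launch("gpt-3.5")` starts the same session as typing `interpreter --fast`, and Open Interpreter still prompts for your API key if it does not have one.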

Using Open Interpreter on Your PC

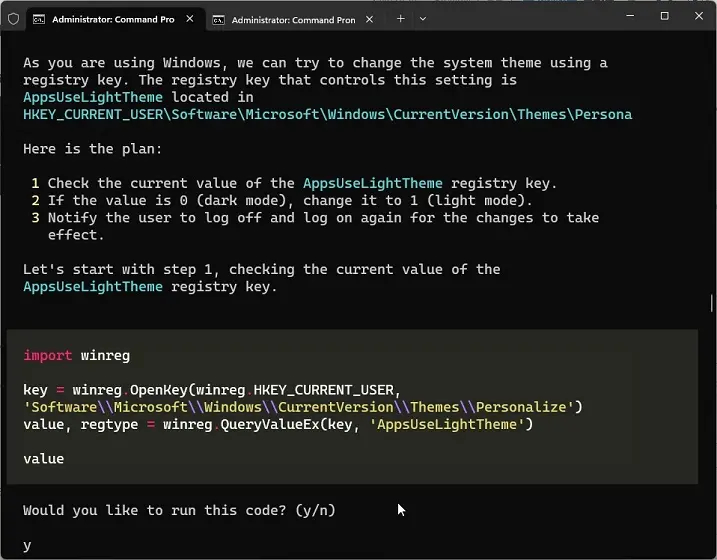

1. Open Interpreter switched Windows to dark mode by creating a Registry key.

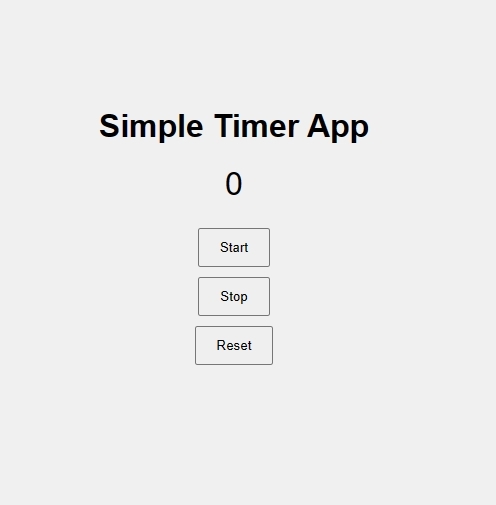

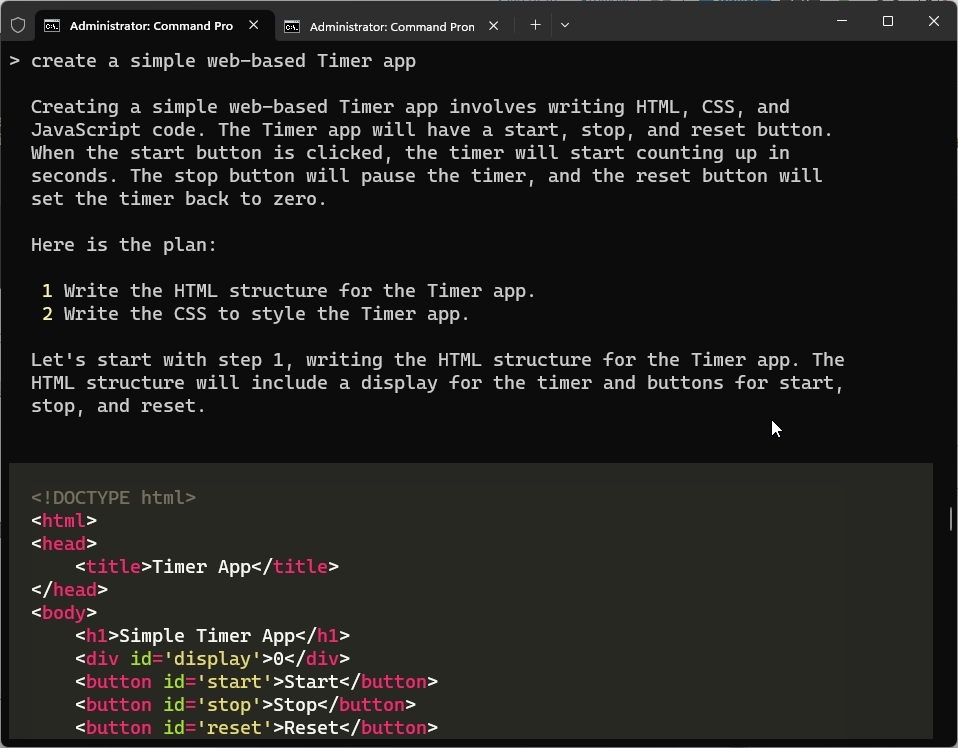

2. Open Interpreter created a simple web-based timer app, showing how AI can be a powerful tool for application development.

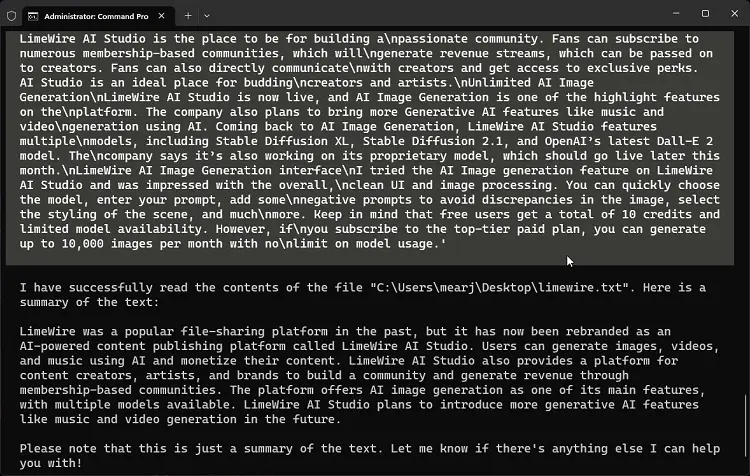

3. Open Interpreter summarized a local text document, showcasing its text-processing capabilities.

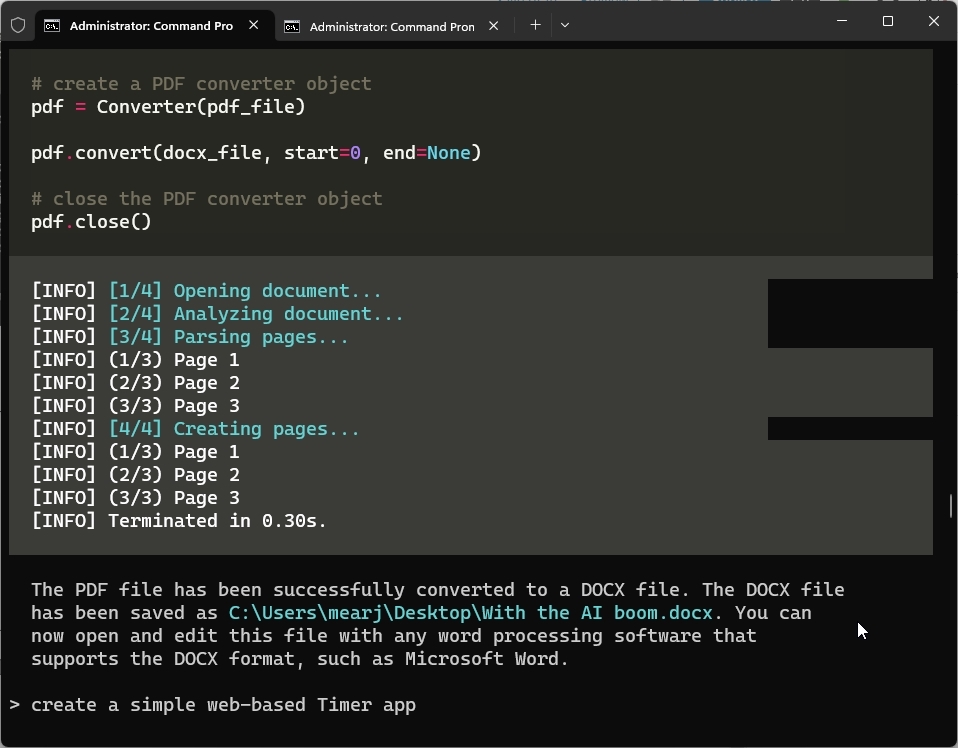

4. Open Interpreter converted a PDF file to DOCX format, a handy task for document management.

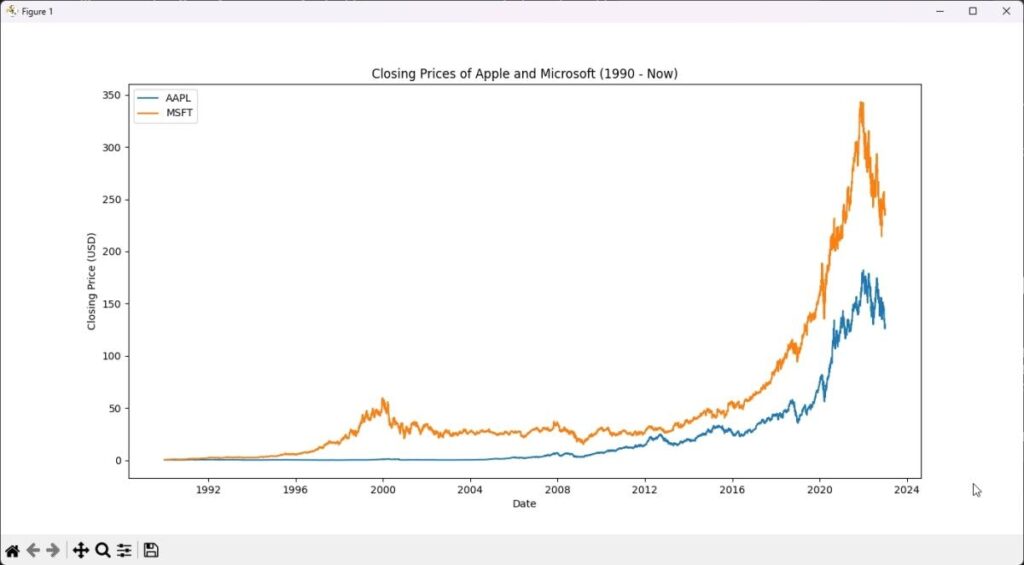

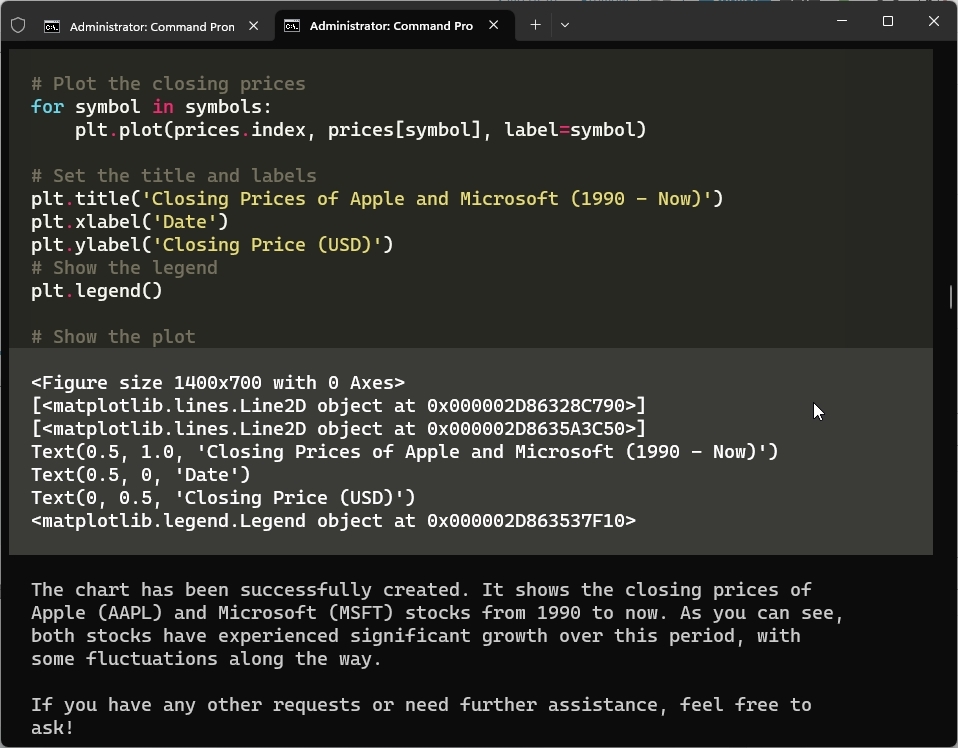

5. Open Interpreter fetched data from the internet and displayed stock prices for Apple and Microsoft in a visual chart, demonstrating its data retrieval and visualization abilities.

Useful Tips for Local Usage of Open Interpreter

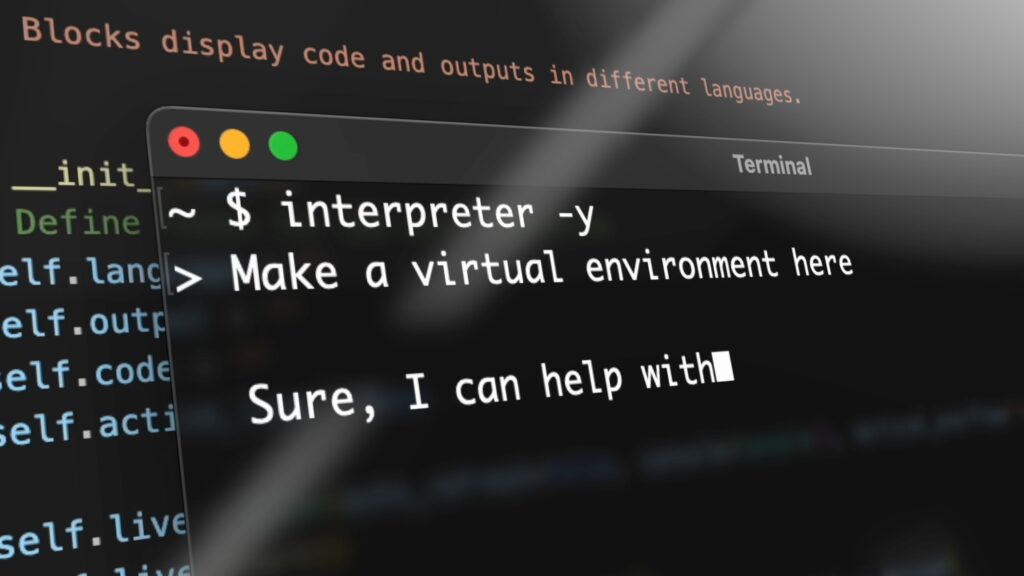

1. Open Interpreter normally asks for your permission before running code. To skip these repeated prompts, start it with the -y flag. Use this with care, since generated code will then run without confirmation.

interpreter -y

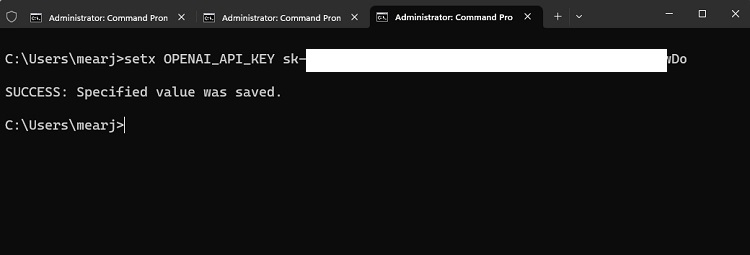

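2. You can save time by storing your OpenAI API key in the OPENAI_API_KEY environment variable, so you aren't asked for it every time. Replace “your_api_key” with your actual key. On Windows, setx saves the variable permanently, but it only takes effect in newly opened Command Prompt windows. On macOS and Linux, export sets it for the current Terminal session only; add the same line to your shell profile (for example ~/.bashrc or ~/.zshrc) to make it permanent.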

- Windows

setx OPENAI_API_KEY your_api_key

- macOS and Linux

export OPENAI_API_KEY=your_api_key

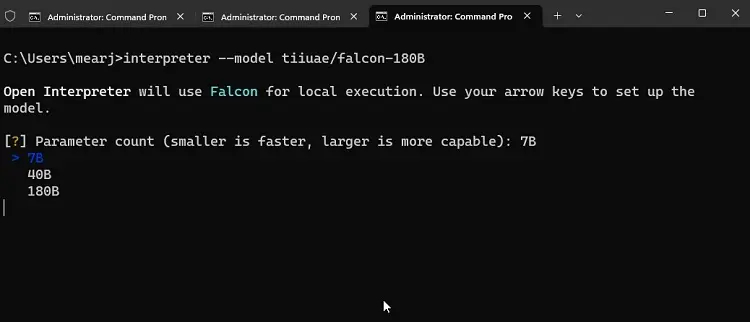

3. If you prefer a different model hosted on Hugging Face, you can specify it by its repository ID. This lets you work with a wide range of open-source AI models. Keep in mind that a model as large as Falcon-180B needs far more memory than a typical PC provides.

interpreter --model tiiuae/falcon-180B
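The tips above can be combined in a small check before you start a session. This Python sketch is illustrative only: `api_key_status` and `command_with_options` are hypothetical helper names, while the `-y` and `--model` flags and the `OPENAI_API_KEY` variable come from this guide. It reports whether the key is set without printing it, and assembles the command line.

```python
import os
import shlex


def api_key_status(env=os.environ) -> str:
    """Report whether OPENAI_API_KEY is set without revealing the key."""
    key = env.get("OPENAI_API_KEY", "")
    if not key:
        return "OPENAI_API_KEY is not set; Open Interpreter will prompt for it."
    # Show only the last four characters so the full key never lands in logs.
    return f"OPENAI_API_KEY is set (ending in ...{key[-4:]})."


def command_with_options(auto_run: bool = False, hf_repo: str = "") -> list[str]:
    """Build the interpreter command from the tips above (hypothetical helper)."""
    cmd = ["interpreter"]
    if auto_run:
        cmd.append("-y")  # skip the confirmation before each code run
    if hf_repo:
        cmd += ["--model", hf_repo]  # Hugging Face repository ID
    return cmd


if __name__ == "__main__":
    print(api_key_status())
    # Prints: interpreter -y --model tiiuae/falcon-180B
    print(shlex.join(command_with_options(auto_run=True, hf_repo="tiiuae/falcon-180B")))
```

`shlex.join` quotes arguments for POSIX shells, so the printed line can be pasted into Terminal on macOS or Linux; on Windows, type the arguments directly in CMD instead.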

These configurations can streamline your interactions with Open Interpreter and make your workflow more efficient. If you encounter difficulties with Open Interpreter, you can explore alternative AI coding tools for a smoother coding experience.