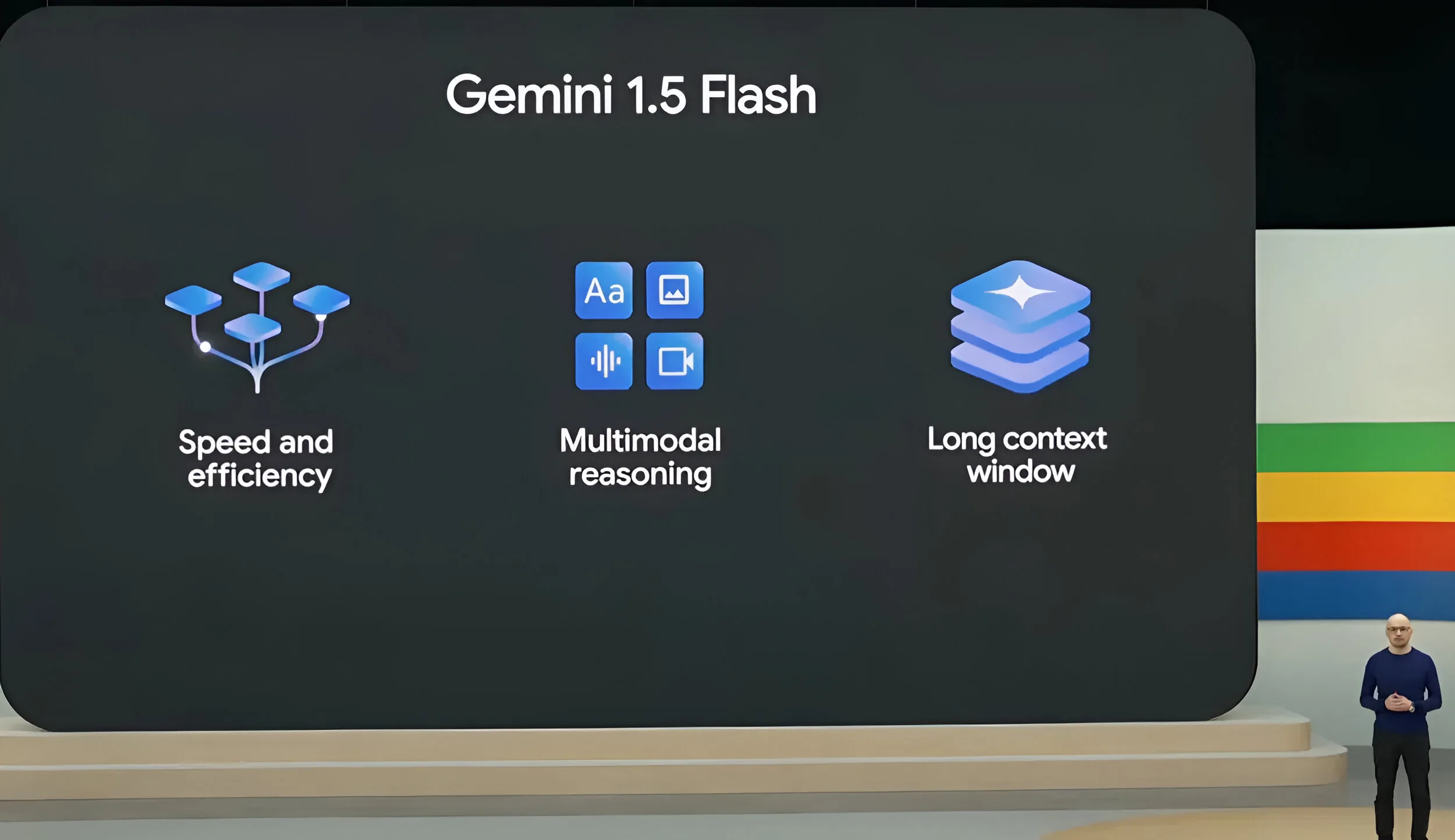

At Google I/O 2024, Google not only unveiled an enhanced Gemini 1.5 Pro model but also introduced a new offering: Gemini 1.5 Flash. Focused on speed and efficiency, this lightweight variant keeps the multimodal reasoning capabilities of the Pro model and, like it, offers a large context window of 1 million tokens.

Designed for tasks where low latency and efficiency matter most, Gemini 1.5 Flash is essentially a compact model, comparable to Anthropic's Claude 3 Haiku, but built on Google's latest advances. Google has not disclosed the model's parameter count.

To try Gemini 1.5 Flash, visit Google AI Studio, where you can start testing the model immediately. It is available in more than 200 countries. Developers and enterprise customers can also access the Flash model through Vertex AI.

Gemini 1.5 Flash is expected to outperform other compact models such as Google's Gemma, Mistral 7B, and Phi-3. Because it is natively multimodal, it can process a range of input types, including text, audio, images, and video. What are your thoughts on this new addition to the Gemini lineup? Share your comments below.