Apple’s generative AI plans remain largely undisclosed. However, its recently unveiled family of open-source large language models suggests a shift towards running AI locally on Apple devices. These models, named OpenELM (Open-source Efficient Language Models), are designed for on-device execution rather than reliance on cloud servers. They are available on the Hugging Face Hub, a central platform for sharing AI models, code, and datasets.

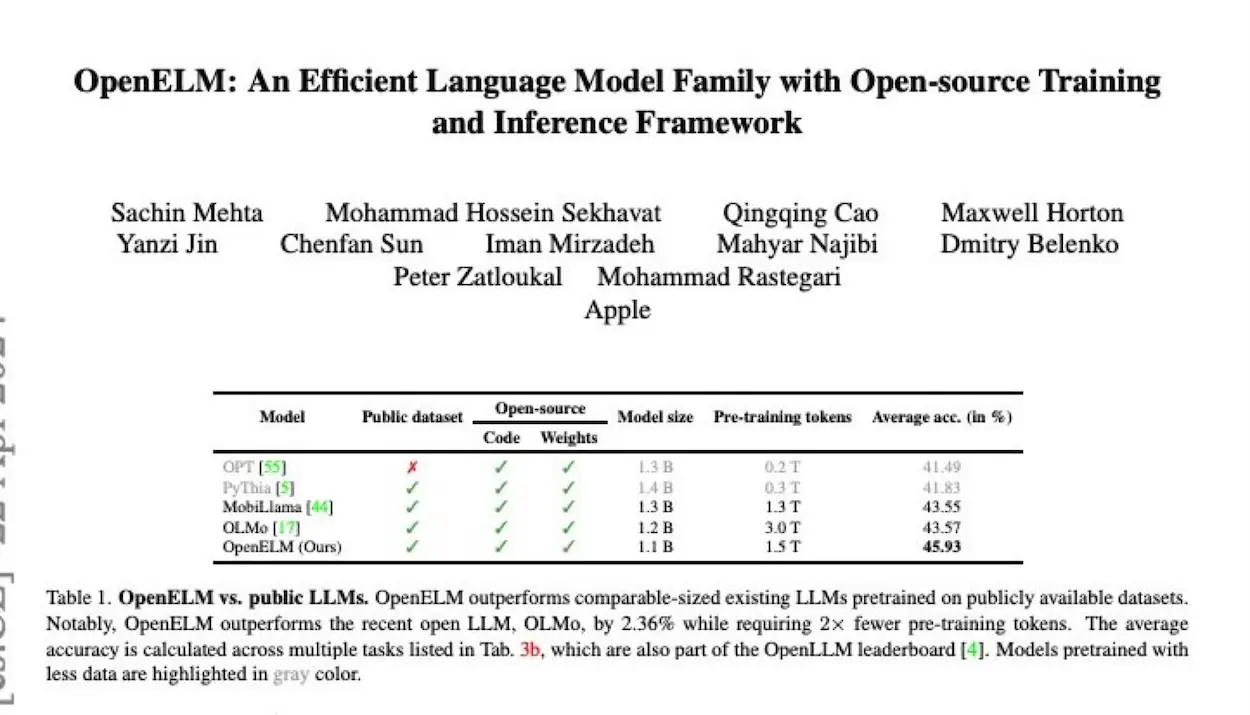

During testing, Apple found that OpenELM demonstrates comparable performance to other open language models despite being trained with less data.

The white paper outlines a total of eight OpenELM models: four pre-trained with the CoreNet library and four instruction-tuned. To improve overall accuracy and efficiency, Apple uses a layer-wise scaling strategy in these open-source LLMs.

“To this end, we release OpenELM, a state-of-the-art open language model. OpenELM uses a layer-wise scaling strategy to efficiently allocate parameters within each layer of the transformer model, leading to enhanced accuracy. For example, with a parameter budget of approximately one billion parameters, OpenELM exhibits a 2.36% improvement in accuracy compared to OLMo while requiring 2× fewer pre-training tokens.” – Apple
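To make the idea of layer-wise scaling more concrete, here is a minimal Python sketch. It is not Apple's implementation. It assumes, as a simplification, that each transformer layer gets its own number of attention heads and its own feed-forward width, both obtained by linearly interpolating a multiplier from the first layer to the last. The function name, parameter names, and the example values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LayerConfig:
    num_heads: int  # attention heads in this layer
    ffn_dim: int    # hidden width of this layer's feed-forward block


def layerwise_scaling(
    num_layers: int,
    d_model: int,
    head_dim: int,
    alpha_min: float,
    alpha_max: float,
    beta_min: float,
    beta_max: float,
) -> List[LayerConfig]:
    """Assign a different size to each layer instead of one uniform size.

    Assumption (hypothetical, for illustration): the attention multiplier
    `alpha` and the feed-forward multiplier `beta` grow linearly with depth.
    """
    configs = []
    for i in range(num_layers):
        # Position of layer i in [0, 1]; a single layer uses the minimum.
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        alpha = alpha_min + (alpha_max - alpha_min) * t
        beta = beta_min + (beta_max - beta_min) * t
        num_heads = max(1, round(alpha * d_model / head_dim))
        ffn_dim = round(beta * d_model)
        configs.append(LayerConfig(num_heads=num_heads, ffn_dim=ffn_dim))
    return configs


# Illustrative values only; these are not OpenELM's published settings.
for idx, cfg in enumerate(
    layerwise_scaling(
        num_layers=4, d_model=1024, head_dim=64,
        alpha_min=0.5, alpha_max=1.0, beta_min=1.0, beta_max=4.0,
    )
):
    print(idx, cfg)
```

In this sketch the early layers are narrower and the later layers wider, so the same overall parameter budget is distributed unevenly across depth rather than split equally among identical layers.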

Apple not only provided the final trained model but also shared the code, training logs, and multiple model iterations. The project’s researchers express optimism that this approach will accelerate advancements and yield “trustworthy results” in natural language AI.

“Diverging from prior practices that only provide model weights and inference code, and pre-train on private datasets, our release includes the complete framework for training and evaluation of the language model on publicly available datasets, including training logs, multiple checkpoints, and pre-training configurations. We also release code to convert models to MLX library for inference and fine-tuning on Apple devices. This comprehensive release aims to empower and strengthen the open research community, paving the way for future open research endeavors.” – Apple

In addition, Apple stated that releasing the OpenELM models will “empower and enrich the open research community” with cutting-edge language models. Because the models are open source, researchers can investigate potential risks as well as data and model biases. Developers can use the models as-is or modify them as needed.

In February, Apple CEO Tim Cook hinted that generative AI features would come to Apple devices later in the year. He later reiterated that the company is committed to delivering groundbreaking AI experiences.

Apple has previously released several other AI models, but it has not yet integrated generative AI capabilities into its devices. However, the upcoming iOS 18 is expected to introduce a range of new AI features, and the release of OpenELM may be the latest part of Apple’s behind-the-scenes preparations.

According to a recent report by Mark Gurman, the AI features in iOS 18 will largely rely on an on-device large language model, offering privacy and speed advantages. More details are expected when Apple announces iOS 18 and other software upgrades at its Worldwide Developers Conference (WWDC) on June 10.