At Google Cloud Next 2024, Google unveiled new cloud hardware and a suite of products aimed at enterprise users. Among them, the Imagen 2 model stands out: it can now generate short, four-second video clips from text prompts.

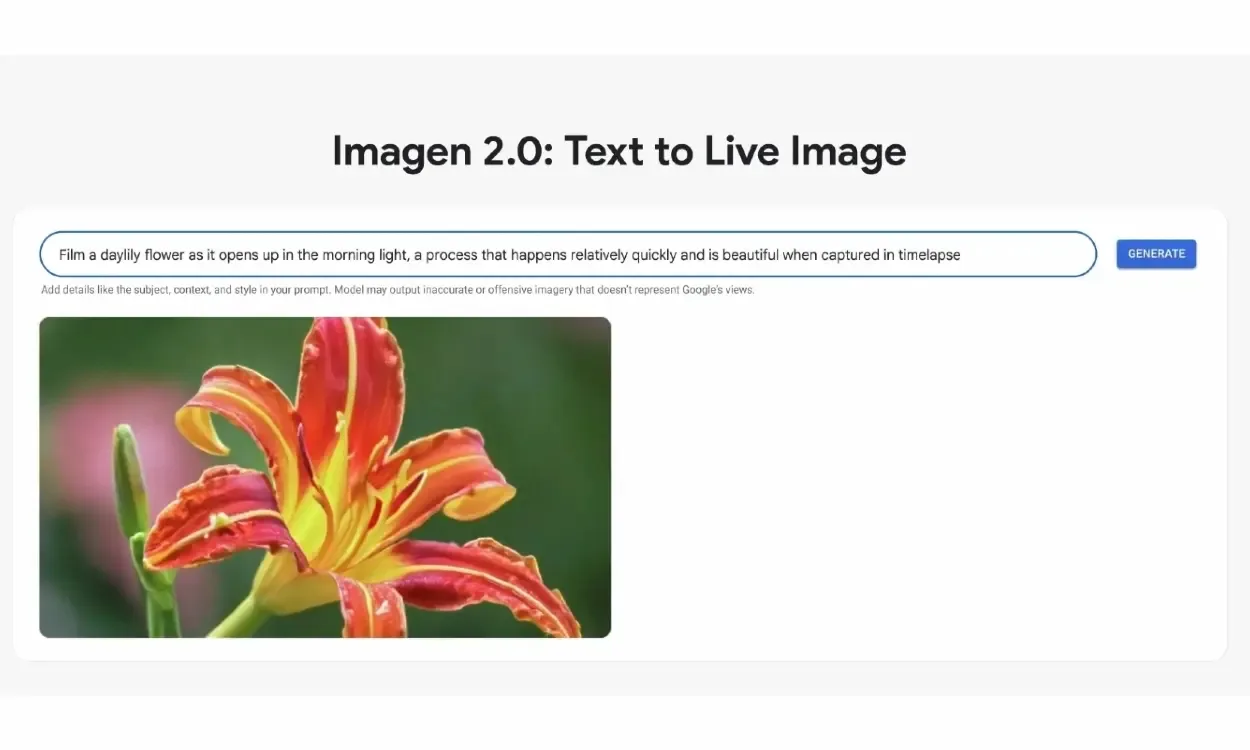

Imagen 2 remains a text-to-image model at its core, but Google now describes it as a text-to-live image model. Unlike many AI-generated videos, which simply animate a static photo with limited motion, Imagen 2 offers a range of camera angles while keeping the scene consistent, according to Google.

The output, however, is limited to low-resolution clips at 640 x 360 pixels. Google is positioning Imagen 2 for enterprise users, particularly marketers and creatives who need to quickly produce short clips for advertising campaigns and similar purposes.

Google is also using its SynthID technology to embed an invisible watermark in AI-generated clips and images. The company claims that the SynthID watermark can withstand edits and compression. In addition, Google has enhanced the image generation model to address safety and bias concerns.

Those concerns are not hypothetical. Google recently faced criticism after Gemini's image generator produced historically inaccurate depictions of people and, in some reported cases, declined to generate images of white individuals. Following the incident, Google paused image generation of people in Gemini, and two months later the restriction remains in place.

Despite this, Imagen 2 is now generally available on Vertex AI for enterprise customers. It also supports inpainting and outpainting, AI-driven editing features that add or remove elements within an image (inpainting) or extend it beyond its original borders (outpainting). OpenAI recently added similar editing capabilities for DALL-E-generated images.

Although Imagen 2 can create video clips up to four seconds long, it is unclear how well it competes with other text-to-video generators. Runway, for example, offers video generation of up to 18 seconds at higher resolutions, and OpenAI has introduced its impressive Sora model. To match these models, Google may need to develop a more powerful diffusion model.