At MWC 2024, Qualcomm unveiled the Qualcomm AI Hub, a platform for bringing on-device AI experiences to devices powered by its chips. The chipmaker says its AI Engine can deliver on-device intelligence across a wide range of platforms, from smartphones to PCs.

The Qualcomm AI Hub offers a library of optimized AI models that you can download and run locally on your device. As part of the Qualcomm AI Stack, it also works with popular AI frameworks such as Google’s TensorFlow and Meta’s PyTorch.

Qualcomm AI Hub Optimizes Over 75 AI Models

Qualcomm’s AI Hub includes more than 75 optimized AI models, which Qualcomm claims deliver up to 4x faster inferencing than competing platforms. These models cover tasks such as image segmentation, text generation, and speech recognition. The platform also supports multimodal AI models that can process images, audio, and speech locally.

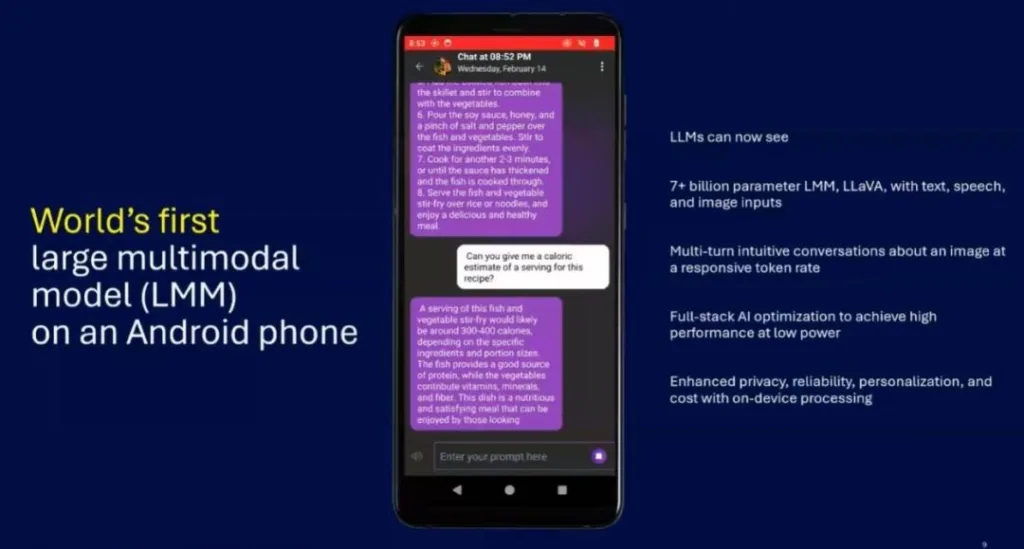

The chip giant highlighted its ability to run multimodal AI models with more than 7 billion parameters locally on Android devices. Supported models include LLaVA, which accepts text, speech, and image input. Qualcomm says the entire software stack has been tuned to maximize AI performance while minimizing power consumption. Because data is processed on the device, it never has to leave it, which protects user privacy and opens the door to personalized AI experiences.

On Windows laptops powered by the upcoming Snapdragon X Elite chipset, users can give voice input, which the system quickly processes to generate a response. Qualcomm has also shown LoRA (Low-Rank Adaptation) running on-device, allowing Stable Diffusion, a model with over 1 billion parameters, to generate customized AI images locally.
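
To make the LoRA idea more concrete: instead of retraining every weight in a model, LoRA keeps a weight matrix W frozen and learns a small low-rank update, so the adapted weight becomes W + (alpha / r) · B·A, where B is d × r, A is r × k, and the rank r is much smaller than d or k. The sketch below is a generic, framework-free illustration in plain Python, not Qualcomm’s implementation; the tiny example matrices, the helper names, and the 4096 × 4096 layer with rank 8 used for the parameter count are assumptions chosen purely for illustration.

```python
# Generic illustration of Low-Rank Adaptation (LoRA), NOT Qualcomm's code.
# The frozen weight W (d x k) is adapted as W' = W + (alpha / r) * (B @ A),
# where only the small matrices B (d x r) and A (r x k) are trained.

def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]

def lora_merge(w, b, a, alpha, r):
    """Return the adapted weight W + (alpha / r) * (B @ A)."""
    delta = matmul(b, a)   # low-rank update, same shape as W
    scale = alpha / r      # common LoRA scaling convention
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

def param_counts(d, k, r):
    """Trainable parameters: full fine-tuning vs. a rank-r LoRA adapter."""
    return d * k, r * (d + k)

if __name__ == "__main__":
    W = [[1.0, 0.0, 0.0],
         [0.0, 1.0, 0.0]]        # hypothetical frozen 2x3 base weight
    B = [[1.0], [2.0]]           # hypothetical 2x1 trained factor
    A = [[0.5, 0.0, -0.5]]       # hypothetical 1x3 trained factor
    print(lora_merge(W, B, A, alpha=1.0, r=1))
    print(param_counts(4096, 4096, 8))  # hypothetical layer size and rank
```

For the assumed 4096 × 4096 layer, a rank-8 adapter trains 65,536 parameters instead of roughly 16.8 million, which helps explain why LoRA adapters are practical to store and swap on a phone or laptop.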

Qualcomm vs Intel: Neural Processing Unit (NPU) Performance

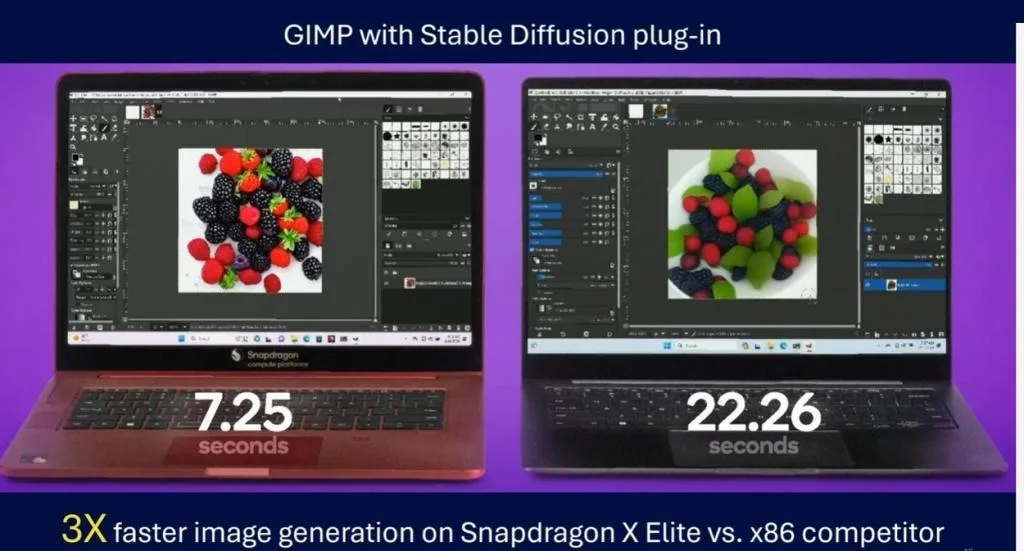

To showcase the on-device AI capabilities of the Snapdragon X Elite, Qualcomm ran an image-generation test in GIMP using the Stable Diffusion plugin. The Snapdragon X Elite laptop generated an AI image in 7.25 seconds, while a laptop with Intel’s latest x86-based Core Ultra processor took 22.26 seconds.
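
The two reported timings translate directly into a speedup figure: divide the slower time by the faster one. A minimal Python sketch of that arithmetic; the function name is illustrative, and the inputs are simply the timings Qualcomm reported for this single test.

```python
def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate is than the baseline."""
    return baseline_s / candidate_s

core_ultra_s = 22.26  # Intel Core Ultra laptop, seconds (as reported)
x_elite_s = 7.25      # Snapdragon X Elite laptop, seconds (as reported)
print(f"{speedup(core_ultra_s, x_elite_s):.2f}x")  # 3.07x
```

A result of about 3.07x is where the "3 times" comparison comes from, though it reflects one vendor-run benchmark rather than a general measurement.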

Intel’s Meteor Lake architecture (used in Core Ultra CPUs) is built from multiple tiles and includes a dedicated NPU, located on its SoC tile, for on-device AI tasks. Even so, the Arm-based Snapdragon X Elite came out ahead with roughly 3x the AI performance, largely thanks to its NPU, which is rated at 45 TOPS. Combining the CPU, GPU, and NPU, the chip delivers up to 75 TOPS of total AI compute.

Lastly, Qualcomm is also advancing hybrid AI experiences, which split AI workloads between on-device compute and the cloud to improve performance. You can explore the Qualcomm AI Hub on its official website.

What are your thoughts on the Qualcomm AI Hub? Do you believe it will catalyze innovative on-device generative AI experiences? Share your opinions in the comments below.