At its Innovation 2023 event, Intel unveiled the 14th Gen Meteor Lake processor lineup, which brings a range of enhancements and new features. Highlights include the Intel 4 process technology, a disaggregated architecture, and Intel Arc integrated graphics. The addition most relevant to everyday users, however, is on-chip AI: Intel plans to bring artificial intelligence to the PC by building a brand-new Neural Processing Unit (NPU) into the Meteor Lake processors. But what exactly is the NPU, and how will it benefit users? Let’s look at the details below.

Intel Unveils First Client Chips with Integrated NPU

Most AI services today run online, and relying on the cloud brings limitations such as high latency and dependence on a stable connection. A Neural Processing Unit (NPU) addresses this by serving as a dedicated block within the processor for AI tasks. Instead of being sent to the cloud, AI workloads can be processed directly on the device. Intel’s new NPU is designed to do just that.

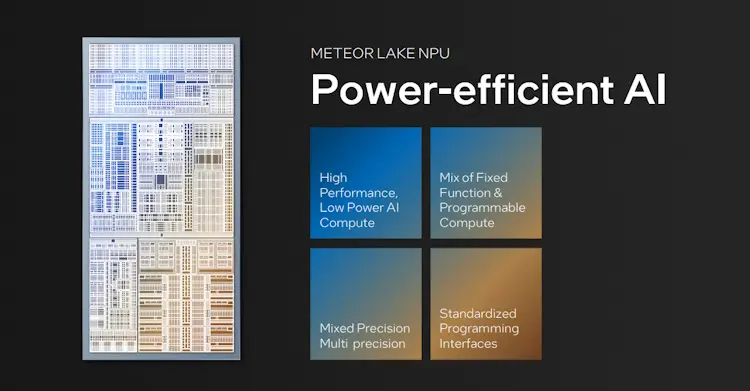

Although Intel has been exploring AI for some time, this is the first time the company has integrated an NPU into client silicon. The NPU in Meteor Lake chips is a specialized, energy-efficient AI engine designed to handle sustained AI workloads, whether the PC is online or offline.

With the Intel NPU on board, AI programs no longer need to do their heavy lifting over the internet. Tasks such as AI image editing, audio separation, and more can now run on the on-device NPU. This integration offers several advantages.

Firstly, users can expect faster responses, since local processing avoids the network round trip that cloud-based processing requires. Additionally, Intel says the NPU improves privacy and security: data can stay on the device and is covered by the chip’s built-in security features. As a result, users should have more flexibility to use AI day to day, with greater convenience and peace of mind.
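For developers, using on-device AI usually starts with choosing where a model should run. The snippet below is a minimal sketch of that choice, not Intel's API: the `pick_device` helper is hypothetical, and the device names ("NPU", "GPU", "CPU", with optional suffixes such as "GPU.0") are assumed to follow the convention used by toolkits such as OpenVINO. It prefers the NPU and falls back to other devices when none is present.

```python
from typing import Iterable, Sequence


def pick_device(
    available: Iterable[str],
    preferred: Sequence[str] = ("NPU", "GPU", "CPU"),
) -> str:
    """Return the first available device that matches the preference order.

    Hypothetical helper: device names are assumed, not taken from Intel docs.
    """
    devices = list(available)
    for kind in preferred:
        for name in devices:
            # Treat indexed names such as "GPU.0" as instances of "GPU".
            if name == kind or name.startswith(kind + "."):
                return name
    raise RuntimeError("no supported inference device found")


# On a machine with an NPU, the NPU is chosen first.
print(pick_device(["CPU", "GPU.0", "NPU"]))
# Without an NPU, the helper falls back to the GPU.
print(pick_device(["CPU", "GPU.0"]))
```

In a real application, the list of devices would come from the inference runtime itself. With OpenVINO installed, for example, it could be read from `openvino.Core().available_devices`.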

The Intel NPU Can Efficiently Switch Between Workloads

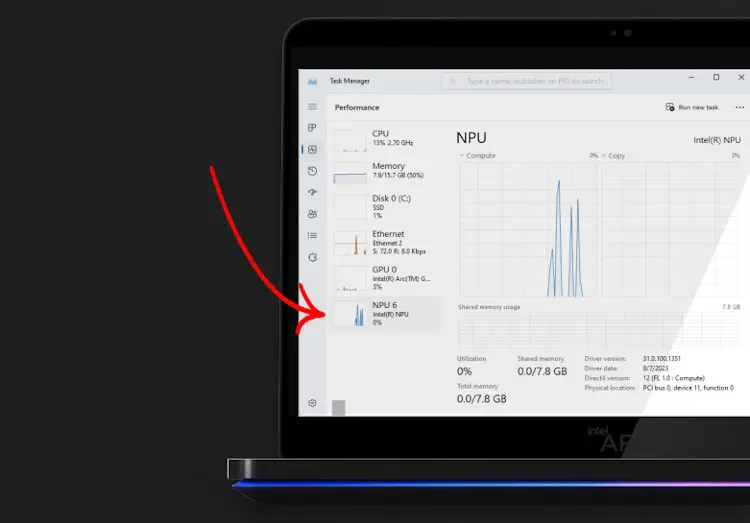

The Intel NPU is organized into two main parts with distinct functions: Device Management and Compute Management. The Device Management component supports a new driver model developed by Microsoft, the Microsoft Compute Driver Model (MCDM). This driver model is what allows Windows to manage AI processing directly on the device. It also means users will see the Intel NPU listed in the Windows Task Manager alongside the CPU and GPU, giving them greater visibility into system performance.

Intel has collaborated with Microsoft for the past six years to develop this driver model. The collaboration has allowed the company to ensure that the NPU executes tasks efficiently while dynamically managing power consumption. As a result, the NPU can quickly drop into a low-power state while sustaining these workloads and ramp back up as needed.

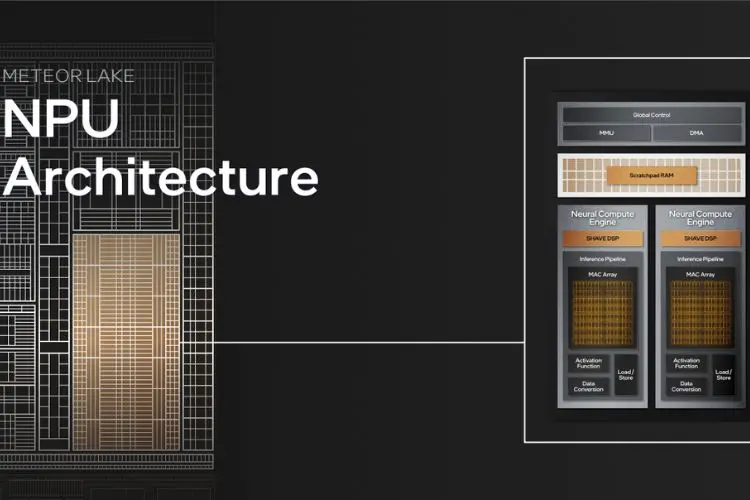

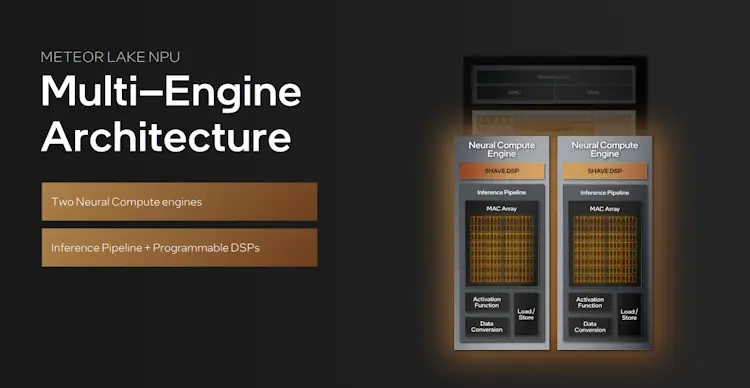

The Intel Meteor Lake NPU features a multi-engine architecture with two neural compute engines. These engines can work on two separate workloads simultaneously or collaborate on the same workload. At the heart of each neural compute engine lies the Inference Pipeline, the core component of the NPU.
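The two modes described above can be illustrated with a toy model. This is only a sketch of the scheduling idea, not how the NPU hardware or its driver works: two Python threads stand in for the two engines, and the `engine`, `run_separate`, and `run_shared` functions are hypothetical names that use a trivial sum of squares as the "workload".

```python
from concurrent.futures import ThreadPoolExecutor

NUM_ENGINES = 2  # the article describes two neural compute engines


def engine(batch):
    """Stand-in for one compute engine: it just sums the squares of a batch."""
    return sum(x * x for x in batch)


def run_separate(workload_a, workload_b):
    """Mode 1: each engine handles its own workload at the same time."""
    with ThreadPoolExecutor(max_workers=NUM_ENGINES) as pool:
        fut_a = pool.submit(engine, workload_a)
        fut_b = pool.submit(engine, workload_b)
        return fut_a.result(), fut_b.result()


def run_shared(workload):
    """Mode 2: both engines split one workload and the results are combined."""
    mid = len(workload) // 2
    with ThreadPoolExecutor(max_workers=NUM_ENGINES) as pool:
        parts = pool.map(engine, [workload[:mid], workload[mid:]])
        return sum(parts)


print(run_separate([1, 2, 3], [4, 5]))  # two independent results
print(run_shared([1, 2, 3, 4]))         # one combined result
```

In the first mode each workload keeps its own result. In the second, the halves must be merged at the end, which is the extra coordination that comes with sharing a single workload.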

Intel’s NPU Enables On-Device AI Capability

While the NPU’s inner workings go much deeper than this overview, users can expect several notable features from the new Meteor Lake architecture. Bringing on-device AI processing to Intel’s consumer chips opens up many possibilities for applications. Additionally, the appearance of the Intel 14th Gen Meteor Lake’s NPU in the Task Manager, though seemingly a small detail, suggests that consumers will have easy visibility into its activity. However, how much users can actually take advantage of these advancements will only become clear once the chips roll out.