The artificial intelligence landscape is advancing quickly, moving beyond traditional chatbots. Since the launch of ChatGPT in late 2022, powered by large language models (LLMs), the spotlight has shifted to action-oriented AI agents. Unlike chatbots such as ChatGPT or Google’s Gemini, which mainly interpret and respond to text and visual inputs, AI agents are capable of carrying out complex tasks. In this guide, we’ll explore what AI agents are, how they work, the different types, and more.

What are AI Agents?

An ‘AI Agent’ refers to an AI-powered software system capable of planning, reasoning, making decisions, and executing multi-step actions to achieve specific goals autonomously. Unlike AI chatbots, which primarily process and respond to information within a closed environment, AI agents interact with external systems to complete tasks.

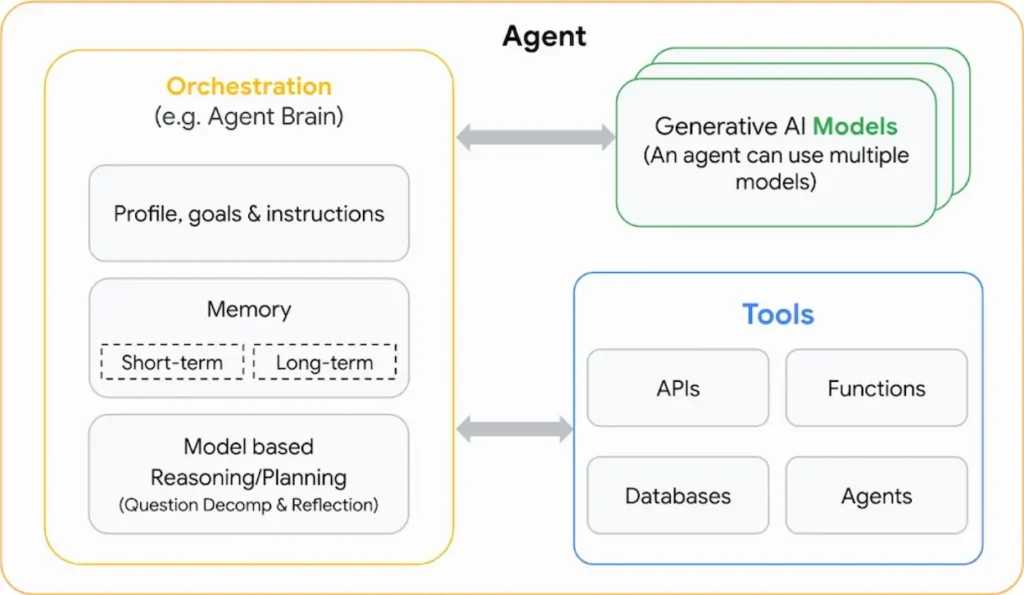

That said, AI agents are still built on large language models (LLMs), just like chatbots. The key difference is that these LLMs are fine-tuned to be action-oriented. In the current AI landscape, companies are leveraging reinforcement learning and advanced reasoning techniques, particularly in vision-language models, to build these agents. They’re also integrating external tools such as APIs, functions, and databases so that AI agents can perform a wide variety of tasks effectively.

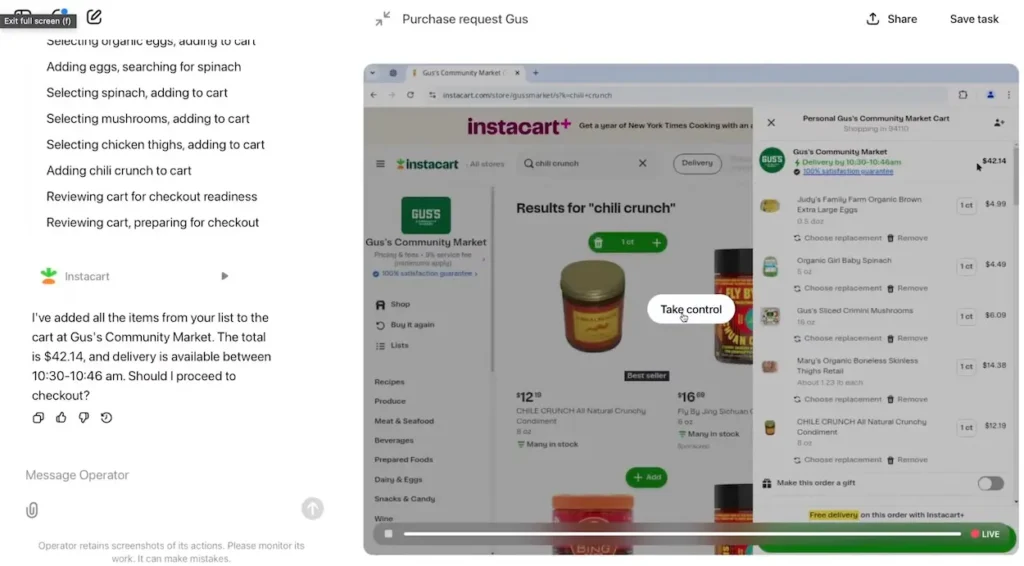

Therefore, AI agents aren’t just standalone models; they function as comprehensive AI systems. These systems support tool calling, incorporate long- and short-term memory, and can interact with third-party platforms to accomplish tasks. A good example is OpenAI’s Operator AI agent, a Computer-Using Agent (CUA) designed to interact with graphical user interfaces (GUIs) on the web.

Essentially, the Operator AI agent can perform a wide range of web-based tasks—browsing websites, ordering groceries, filling out forms, booking flights, and more. It uses GPT-4o’s vision capabilities to understand the screen and determine where to click next. However, it’s not fully autonomous yet; it can sometimes get stuck in loops and may require human supervision to complete certain actions.

Since AI agents are still in the early stages, critical tasks like making payments are typically handed back to the user for safety. In short, while AI chatbots focus on processing and generating information, the next big leap in AI lies with action-driven agents that can actively perform tasks in the real world.
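As a rough illustration of the ideas in this section (tool calling, short-term memory, and handing critical steps back to the user), here is a minimal Python sketch. It is not how Operator or any real product is implemented. The tool names `search_flights` and `pay`, the `CRITICAL` set, and the `Agent` class are all hypothetical, and the plan is hard-coded where a real agent would ask an LLM to produce it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


# Hypothetical tools; a real agent would call external APIs or drive a browser here.
def search_flights(destination: str) -> str:
    return f"Found 3 flights to {destination}"


def pay(amount: str) -> str:
    return f"Paid {amount}"


TOOLS: Dict[str, Callable[[str], str]] = {"search_flights": search_flights, "pay": pay}
CRITICAL = {"pay"}  # assumed policy: these actions need explicit user approval


@dataclass
class Agent:
    # Short-term memory: a log of what each step did.
    memory: List[str] = field(default_factory=list)

    def run(self, plan: List[Tuple[str, str]], confirm: Callable[[str], bool]) -> List[str]:
        """Execute (tool_name, argument) steps, asking the user before critical ones."""
        for tool_name, arg in plan:
            if tool_name in CRITICAL and not confirm(f"{tool_name}({arg})"):
                self.memory.append(f"{tool_name}: skipped, user declined")
                continue
            result = TOOLS[tool_name](arg)
            self.memory.append(f"{tool_name}: {result}")
        return self.memory


agent = Agent()
plan = [("search_flights", "Tokyo"), ("pay", "$450")]
# The confirm callback stands in for a real prompt shown to the user.
log = agent.run(plan, confirm=lambda action: False)
```

Here the search step runs on its own, while the payment step is skipped because the stand-in `confirm` callback declines it.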

Types of AI Agents

In their influential book Artificial Intelligence: A Modern Approach, Stuart Russell and Peter Norvig classify AI agents into five broad categories: Simple Reflex Agents, Model-Based Reflex Agents, Goal-Based Agents, Utility-Based Agents, and Learning Agents.

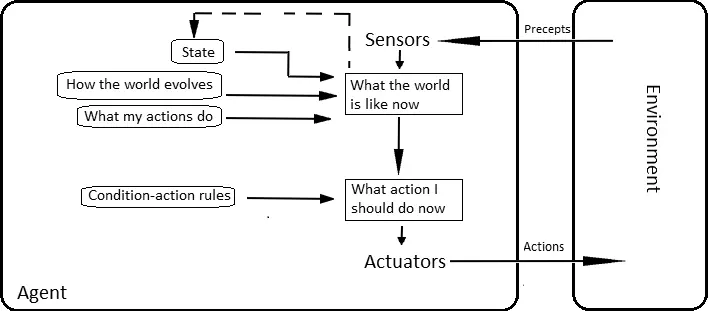

The Simple Reflex Agent operates purely on conditional logic—it performs actions based on predefined rules. It’s the most basic type of AI agent, lacking memory or the ability to learn from past experiences. It doesn’t consider historical data or adapt its behavior over time; it simply reacts to current input based on fixed conditions.
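A simple reflex agent can be sketched in a few lines of Python. The example below assumes a two-square vacuum world in the spirit of Russell and Norvig's textbook example; the percept keys `location` and `dirty` and the action names are illustrative choices, not a standard API.

```python
def simple_reflex_agent(percept: dict) -> str:
    """Map the current percept straight to an action using fixed rules.

    No memory is kept: the same percept always produces the same action.
    """
    if percept["dirty"]:
        return "suck"
    if percept["location"] == "A":
        return "move_right"
    return "move_left"


action = simple_reflex_agent({"location": "A", "dirty": True})
```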

In contrast, Model-Based Reflex Agents have memory and construct a basic internal model of the world by observing how it changes with their actions. For example, a robot vacuum cleaner updates its understanding of a room as it encounters new obstacles, allowing it to navigate more effectively. Although these agents use memory, their behavior is still driven by predefined rules.
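The robot vacuum idea can be sketched as follows. This is a deliberately tiny model, assuming a grid room and a single hypothetical sensor reading, `blocked_ahead`. The class and its rules are made up for illustration and do not reflect how any real vacuum works.

```python
from typing import Set, Tuple


class ModelBasedVacuum:
    """Keeps an internal map of known obstacles and applies fixed rules on top of it."""

    def __init__(self) -> None:
        self.position: Tuple[int, int] = (0, 0)
        self.obstacles: Set[Tuple[int, int]] = set()  # internal model of the room

    def step(self, blocked_ahead: bool) -> str:
        x, y = self.position
        ahead = (x + 1, y)
        if blocked_ahead:
            self.obstacles.add(ahead)  # update the model from the new percept
        if ahead in self.obstacles:
            self.position = (x, y + 1)  # rule: sidestep any known obstacle
            return "move_up"
        self.position = ahead  # rule: otherwise keep moving forward
        return "move_right"


vac = ModelBasedVacuum()
first = vac.step(blocked_ahead=False)   # nothing in the way yet
second = vac.step(blocked_ahead=True)   # sensor reports an obstacle at (2, 0)
```

The rules are still fixed, but because the obstacle is stored in `obstacles`, the vacuum will avoid that cell on a later pass even if the sensor reports nothing.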

Goal-Based Agents, on the other hand, operate with specific objectives rather than rigid rules. They use reasoning and planning to determine the best actions to reach their goals. These agents evaluate various scenarios before acting, ensuring their choices bring them closer to completing a task. A good example is a chess-playing AI, which analyzes multiple possible moves and outcomes to secure a win.
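Chess is too large for a short example, so the sketch below swaps in a much simpler goal: finding a route between rooms. It uses breadth-first search as the planning step, which is only one of many possible planning techniques. The function name `plan_route` and the room graph are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional


def plan_route(graph: Dict[str, List[str]], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search: return a shortest path of states from start to goal."""
    frontier = deque([[start]])  # paths still to be explored
    visited = {start}
    while frontier:
        path = frontier.popleft()
        state = path[-1]
        if state == goal:
            return path
        for nxt in graph.get(state, []):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None  # the goal cannot be reached


rooms = {
    "hall": ["kitchen", "study"],
    "kitchen": ["pantry"],
    "study": ["library"],
    "library": ["pantry"],
}
route = plan_route(rooms, "hall", "pantry")
```

Unlike the reflex agents, nothing here says "in the hall, go to the kitchen"; the agent is given only the goal and works out the steps by considering possible futures before acting.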

Utility-Based Agents select a series of actions designed to maximize “happiness” or “satisfaction.” Essentially, these agents operate with a utility function, similar to a reward function, that scores possible outcomes and guides them to choose the most beneficial course of action.

Finally, Learning Agents share the capabilities of other AI agents but have the added advantage of learning from their environment. They improve over time, adapting and refining their preferences based on new information and usage. If you want to dive deeper into the details of each type, we’ve covered all the AI agent categories in our dedicated guide.
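To make the last two categories concrete, here is a small sketch that combines them: the agent picks the action with the highest estimated utility, and it refines those estimates from the rewards it observes. The class name, the action names, and the running-average update are illustrative assumptions. The agent is purely greedy, so a practical learning agent would also need some way to explore.

```python
from typing import Dict, List


class LearningUtilityAgent:
    """Chooses the highest-utility action and updates its estimates from rewards."""

    def __init__(self, actions: List[str]) -> None:
        self.utility: Dict[str, float] = {a: 0.0 for a in actions}
        self.counts: Dict[str, int] = {a: 0 for a in actions}

    def choose(self) -> str:
        # Utility-based step: take the action with the best current estimate.
        return max(self.utility, key=self.utility.get)

    def learn(self, action: str, reward: float) -> None:
        # Learning step: keep a running average of the reward seen for this action.
        self.counts[action] += 1
        n = self.counts[action]
        self.utility[action] += (reward - self.utility[action]) / n


agent = LearningUtilityAgent(["fast_route", "scenic_route"])
agent.learn("fast_route", 2.0)
agent.learn("scenic_route", 5.0)
agent.learn("scenic_route", 3.0)
best = agent.choose()
```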

Examples of AI Agents

As mentioned earlier, OpenAI has launched its first consumer AI agent, called Operator. This agent can browse the web using a cloud web browser and perform tasks on your behalf. You can ask Operator to order food, find hotels, book concert tickets, and more. Since the agent is still in the early research preview phase, it’s currently only available to ChatGPT Pro subscribers, who pay $200 per month.

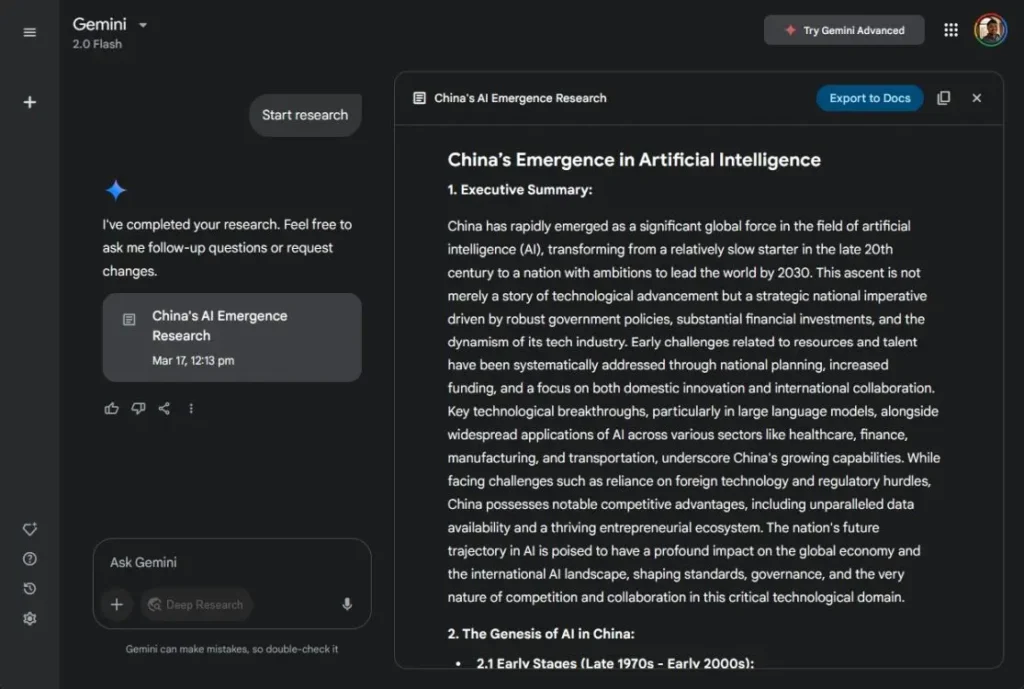

In addition to Operator, OpenAI has introduced the Deep Research AI agent, designed to explore any topic in-depth and generate comprehensive reports. This agent also provides citations, allowing you to verify the information by clicking on the source links. Similarly, Gemini offers its own Deep Research AI agent, which performs the same tasks and is available for free.

Anthropic has introduced the Computer Use AI agent, capable of operating a computer by visually analyzing the screen. I personally tested this AI agent in a Docker instance, and while it responds slowly, it successfully carries out tasks and controls the computer. Additionally, Anthropic’s Model Context Protocol (MCP) standard is being adopted by major companies like Google, OpenAI, and Microsoft to connect AI models and agents with external tools and data sources.

Recently, Manus, a general AI agent from China, gained significant attention for its ability to browse the web, run code, and interact with a cloud computer to complete tasks. While the demo was impressive, it was later revealed that Manus is powered by Anthropic’s Claude 3.5 Sonnet model.

On the consumer side, Google is developing Project Mariner, an AI agent that can perform tasks within the Chrome browser, similar to OpenAI’s Operator. The agent is currently being tested with trusted testers, and Google plans to release it in the coming months.

Overall, it seems that the era of agentic AI is still a year or two away. We haven’t yet reached a point where AI models can be fully trusted to carry out critical tasks autonomously. Even AI companies are incorporating human oversight as the default method for interacting with AI agents. However, the future of AI will undoubtedly be action-driven, with major AI labs like OpenAI and Google DeepMind actively working to turn the vision of agentic AI into a reality.