While OpenAI’s recent release of the GPT-4o model may have grabbed attention, Google is now stepping into the spotlight with its own generative video model called “Veo”. Announced at its developer conference, Veo is poised to rival OpenAI’s Sora, which was introduced earlier this year.

So, what sets Veo apart? Google DeepMind CEO Sir Demis Hassabis unveiled Veo as the company’s most advanced generative video model yet. It promises to let users create high-quality videos (up to 1080p) using text, images, and even other videos as prompts.

According to the official blog post, Veo “enables you to create content that captures emotional nuance across visual styles and produces striking cinematic effects.”

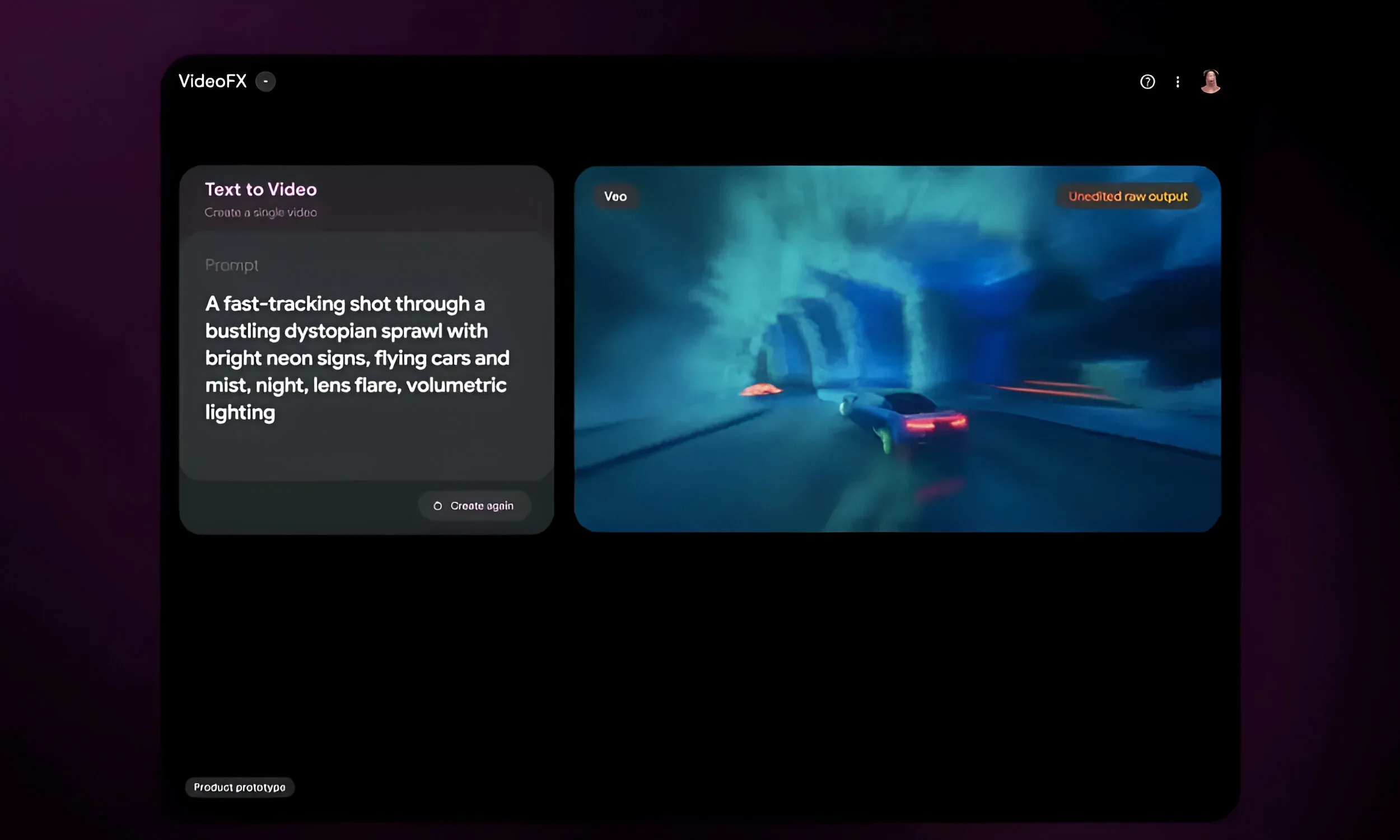

While Sora has been limited to select developers and users thus far, Google is opening up access to Veo through a waitlist. Users can sign up to try out Veo via Google’s new experimental tool, VideoFX, which will initially roll out in the U.S. To join the VideoFX waitlist, visit this link.

During Google I/O 2024, the company provided a sneak peek of the VideoFX tool, showcasing its ability to generate videos based on text prompts. The tool features a split-screen interface where you can enter text on the left and view the generated video on the right, with the option to extend the video up to 60 seconds.

VideoFX also includes a Storyboard mode, which lets users iterate scene by scene, watch the video come together gradually, and add music to the final cut.

In addition to Veo, Google used the developer conference to introduce its new Imagen 3 model for image generation and to expand Gemini 1.5 Pro’s context window to 2 million tokens.