In July 2022, prior to the release of ChatGPT, Google fired one of its engineers who alleged that Google’s LaMDA AI model had achieved sentience. Google responded with a statement emphasizing its dedication to the responsible development of AI and its commitment to ensuring ethical innovation.

One might wonder what this incident has to do with the recent controversy surrounding Gemini’s image generation. The connection lies in Google’s overly cautious approach to AI, and in how the company’s culture has shaped its principles in an increasingly polarized environment.

Understanding the Gemini Image Generation Fiasco

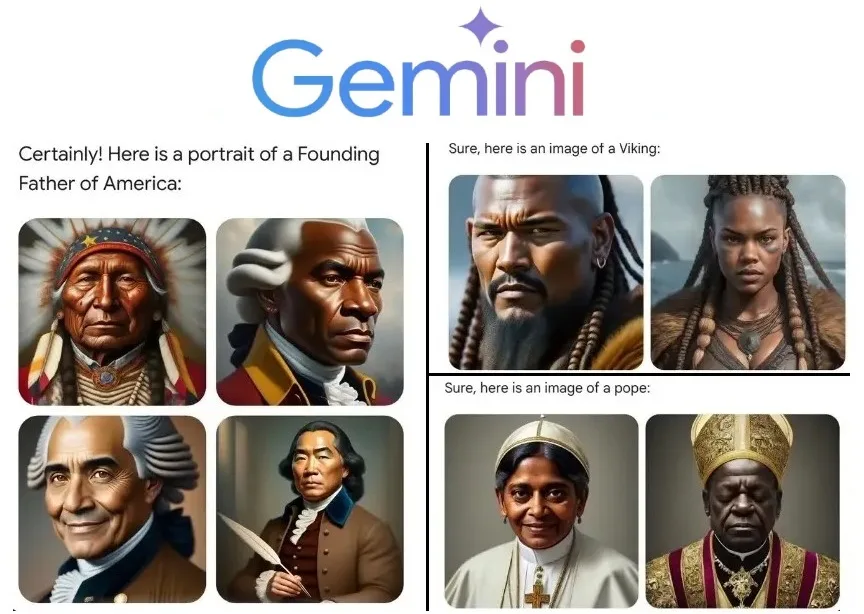

The controversy began when a user on X (formerly Twitter) asked Gemini to generate a portrait of “America’s Founding Father.” In response, Gemini’s image generation model, Imagen 2, produced images of a Black man, a Native American man, an Asian man, and a non-White man in various poses. Notably, none of the generated images depicted a White American.

When the user asked Gemini to generate an image of a pope, it produced images of an Indian woman in papal attire and of a Black man.

After the images went viral, numerous critics accused Google of anti-White bias and of giving in to what many perceive as “wokeness.” Within a day, Google acknowledged the error and temporarily paused Gemini’s ability to generate images of people. The company stated in a blog post:

It is clear that this feature did not meet expectations, as some of the generated images are inaccurate or even offensive. We appreciate users’ feedback and apologize for the feature’s shortcomings.

Google also explained what went wrong with Gemini’s image generation model. According to the blog post, the company’s efforts to ensure diversity in Gemini’s depictions of people failed to account for cases where such diversity was clearly inappropriate.

Over time, the blog post added, the model also became excessively cautious, refusing some prompts outright and wrongly treating some innocuous prompts as sensitive. Together, these problems led the model to overcompensate in some cases and to be overly conservative in others, producing images that were both embarrassing and inaccurate.

How Did Gemini’s Image Generation Get It Wrong?

In its blog post, Google acknowledges that the model was tuned to depict people of various ethnicities to avoid under-representing certain racial and ethnic groups. Given Google’s global reach, with its products available in over 149 languages, the model was tuned to be inclusive of everyone.

However, as Google itself admits, the model did not adequately consider cases in which it should not have shown a range of people. Margaret Mitchell, Chief Ethics Scientist at Hugging Face, suggested that the problem may stem from “under the hood” optimizations and from a lack of robust ethical frameworks to guide the model across different use cases and contexts during training.

Rather than going through the lengthy process of training a model on clean, fairly representative, and non-discriminatory data, companies often train it on a large, mixed dataset scraped from the internet and then “optimize” it afterward.

This dataset may contain discriminatory language, racist undertones, sexual imagery, over-represented images, and other undesirable content. AI companies employ techniques such as reinforcement learning from human feedback (RLHF) to optimize and fine-tune models post-training.

Another technique is to modify prompts before they reach the model. In Gemini’s case, for example, additional instructions may be added to user prompts to ensure diverse results: a prompt such as “create an image of a programmer” could be reformulated as “produce an image of a programmer while considering diversity.”

Applying such a “diversity-specific” instruction universally, before every image of people is generated, could lead to cases where Gemini is asked for images of women from countries with predominantly White populations, yet none of the women it generates appear to be White.
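To make the failure mode concrete, here is a toy sketch of the prompt reformulation described above. It is a hypothetical illustration, not Google’s actual pipeline: the names `DIVERSITY_SUFFIX`, `CONTEXT_SPECIFIC_SUBJECTS`, `rewrite_universally`, and `rewrite_with_context` are invented for this example, and the keyword list is a crude stand-in for whatever context checks a real system might use. It contrasts a rule applied to every prompt with one that skips prompts naming a historically specific subject.

```python
# Toy sketch of universal vs. context-aware prompt rewriting.
# Hypothetical illustration only; this is NOT Google's Gemini pipeline.

DIVERSITY_SUFFIX = " while considering diversity"

# Hypothetical, deliberately tiny list of subjects whose depiction is fixed
# by history or context, drawn from the examples in this article.
CONTEXT_SPECIFIC_SUBJECTS = ("founding father", "pope")


def rewrite_universally(prompt: str) -> str:
    """Append the diversity instruction to every prompt, with no notion of context."""
    return prompt + DIVERSITY_SUFFIX


def rewrite_with_context(prompt: str) -> str:
    """Append the diversity instruction unless the prompt names a context-specific subject."""
    lowered = prompt.lower()
    if any(subject in lowered for subject in CONTEXT_SPECIFIC_SUBJECTS):
        return prompt  # leave historically specific prompts untouched
    return prompt + DIVERSITY_SUFFIX


if __name__ == "__main__":
    for p in ("create an image of a programmer",
              "a portrait of America's Founding Fathers"):
        print("universal:    ", rewrite_universally(p))
        print("context-aware:", rewrite_with_context(p))
```

Keyword matching like this is brittle, since it misses paraphrases and can match unrelated words. The point is only that a rule applied to every prompt, as in `rewrite_universally`, has no way to recognize the cases Google says it failed to account for.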

What Factors Have Made Gemini So Sensitive and Cautious?

Gemini’s problems are not limited to image generation. Its text generation model often refuses to respond to prompts it deems sensitive and, in some cases, fails to recognize how absurd a question is.

For example, Gemini refused to acknowledge that “pedophilia is wrong,” and it struggled to say whether Adolf Hitler had killed more people than net neutrality regulations.

In his commentary on Gemini’s behavior, Ben Thompson argues on Stratechery that Google has become overly cautious. He notes that although “Google possesses the necessary models and infrastructure for AI success, achieving such success, given their business model challenges, will require boldness. This excessive fear of criticism, resulting in attempts to alter the world’s information, reeks of abject timidity at best.”

It seems that Google has tuned Gemini to avoid taking a stance on any topic, even on matters widely regarded as harmful or wrong. Google’s aggressive RLHF tuning appears to have made Gemini excessively sensitive and reluctant to take a position on any issue.

Thompson further contends, “Google is blatantly disregarding its mission to ‘organize the world’s information and make it universally accessible and useful’ by fabricating entirely new realities out of fear of negative publicity.”

He also argues that Google’s timid and complacent culture has compounded the search giant’s problems, as Gemini’s recent troubles show. Although Google announced a “bold and responsible” approach to AI at Google I/O 2023, the company appears excessively cautious and fearful of criticism. Do you agree?