The age of artificial intelligence has arrived, with Generative AI at the forefront of remarkable advancements in everyday technology. Numerous free AI tools can now help you generate stunning images, text, music, videos, and more within seconds. But what exactly is Generative AI, and how is it driving such rapid innovation? To find out, read our detailed explainer on Generative AI.

What is Generative AI?

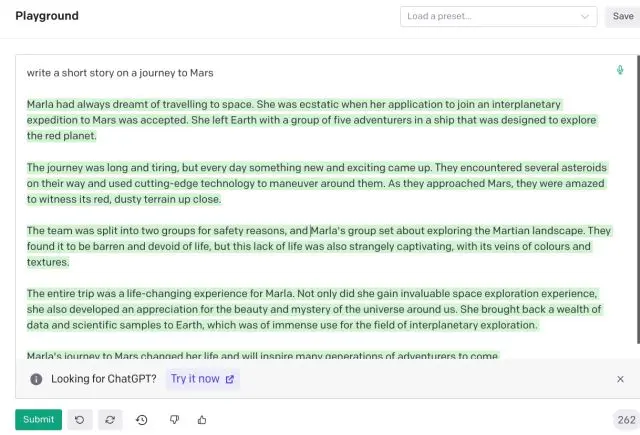

Generative AI is a type of AI technology that creates new content based on the data it has been trained on. This includes generating text, code, images, audio, videos, and synthetic data. Generative AI can produce a wide range of outputs based on user inputs, also known as “prompts.” Essentially, it is a subfield of machine learning that learns patterns from an existing dataset and uses them to create new data.

When a large language model (LLM) is trained on a massive volume of text, it can generate coherent human language. In general, the larger the dataset, the better the output quality. If the dataset is cleaned before training, the responses tend to be more nuanced and accurate.

Similarly, if a model is trained on a large collection of images with tags, captions, and various visual examples, it can learn from these examples to perform tasks like image classification and generation. The underlying system that learns from examples in this way is known as a neural network.

There are various types of Generative AI models, including Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), Generative Pretrained Transformers (GPTs), Autoregressive models, and more. Below, we will briefly discuss these different generative models.

Currently, Transformer-based models such as GPT-4o, GPT-4, GPT-3.5 (ChatGPT), Gemini 1.5 Pro (Gemini), and LLaMA (Meta) have gained significant popularity, alongside image generators such as DALL-E 3 and Stable Diffusion, which are built on diffusion models. OpenAI has also recently demonstrated its Sora text-to-video model.

Most of these user-friendly AI interfaces are built on the Transformer architecture. Therefore, in this explainer, we will primarily focus on Generative AI and GPT (Generative Pretrained Transformer).

What Are the Various Types of Generative AI Models?

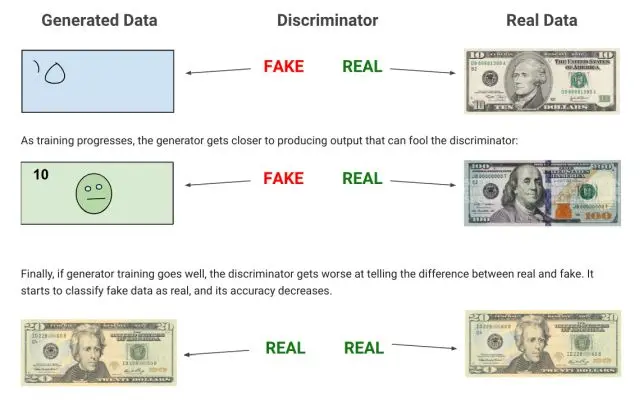

Among all the Generative AI models, GPT is favored by many. However, let’s start with GAN (Generative Adversarial Network). In this architecture, two neural networks are trained together: one generates content (called the generator) and the other judges whether a given sample is real or generated (called the discriminator).

The primary goal is to pit these two neural networks against each other to produce results that closely resemble real data. GAN-based models are mostly used for image-generation tasks.
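The tug-of-war between the two networks can be sketched with the losses each side tries to minimize. This is a minimal illustration, assuming the commonly used binary cross-entropy formulation; the function names and the discriminator scores below are hypothetical and not taken from any specific GAN implementation.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Cross-entropy loss: low when real samples score high and fakes score low."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: the probability that each sample is real.
real_scores = [0.9, 0.8]
early_fakes = [0.1, 0.2]  # early in training, fakes are easy to spot
later_fakes = [0.5, 0.6]  # later, fakes are harder to tell apart

print("generator loss:", generator_loss(early_fakes), "->", generator_loss(later_fakes))
print("discriminator loss:",
      discriminator_loss(real_scores, early_fakes), "->",
      discriminator_loss(real_scores, later_fakes))
```

As the fakes become more convincing, the generator's loss falls while the discriminator's loss rises, which is the adversarial dynamic described above.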

Next, we have the Variational Autoencoder (VAE), which involves encoding, learning, decoding, and generating content. For example, if you give it an image of a dog, the encoder compresses the scene into a compact representation of attributes like color, size, and ear shape, learning the characteristics of a dog. The decoder then recreates a simplified image from these key points, and sampling slightly different points lets the model generate a final image with added variety and nuances.
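The "added variety" in a VAE comes from sampling a point in the compressed representation rather than reusing a single fixed code. The sketch below assumes the standard Gaussian formulation, where the encoder outputs a mean and a log-variance per latent dimension; the encoder outputs shown are hypothetical placeholder values, and no real encoder or decoder network is included.

```python
import math
import random

def sample_latent(mean, log_var, rng):
    """Reparameterization: z = mean + std * noise, with noise drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mean, log_var)]

def kl_to_standard_normal(mean, log_var):
    """KL divergence from N(mean, var) to N(0, 1), summed over latent dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mean, log_var))

# Hypothetical encoder outputs for one image (two latent dimensions).
mean, log_var = [0.5, -1.0], [-2.0, -2.0]

rng = random.Random(0)
samples = [sample_latent(mean, log_var, rng) for _ in range(3)]
print(samples)                              # three nearby but distinct latent codes
print(kl_to_standard_normal(mean, log_var)) # regularization term added to the training loss
```

Each sampled code would be passed to the decoder to produce a slightly different image of the same subject.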

Moving on to Autoregressive models, these generate a sequence one element at a time, predicting the next part based on what has been generated so far. They are mainly used for text generation. GPT-style Transformers are themselves autoregressive, whereas earlier autoregressive models, such as those built on RNNs, lack self-attention mechanisms. Additionally, we have Normalizing Flows and Energy-based Models. Finally, we will delve into the popular Transformer-based models in detail below.
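The idea of predicting the next part from what has been generated so far can be shown with a toy model. This is a deliberately simplified sketch: it conditions only on the single previous word (a bigram model) and always picks the most frequent follower, whereas real autoregressive models condition on the whole preceding sequence. The corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, max_new_tokens):
    """Extend the sequence one word at a time, feeding each output back in."""
    sequence = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(sequence[-1])
        if not followers:
            break  # no known continuation for this word
        sequence.append(followers.most_common(1)[0][0])
    return sequence

corpus = "the dog chased the cat and the dog chased the ball".split()
model = train_bigram(corpus)
print(generate(model, "the", 3))
```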

What Is a Generative Pretrained Transformer (GPT) Model?

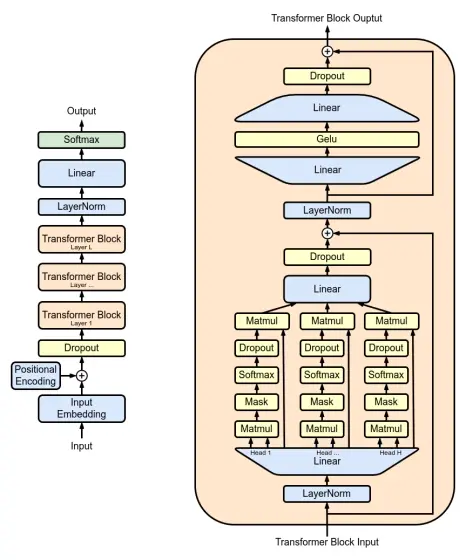

Before the arrival of the Transformer architecture, Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs), along with generative models like GANs and VAEs, were widely used in Generative AI. In 2017, researchers at Google published a groundbreaking paper titled “Attention Is All You Need” (Vaswani, Uszkoreit, et al., 2017), which revolutionized the field of Generative AI and paved the way for large language models (LLMs).

Following this, Google introduced the BERT model (Bidirectional Encoder Representations from Transformers) in 2018, which is built on the Transformer architecture. Around the same time, OpenAI released its first GPT model, GPT-1, also based on the Transformer architecture.

What made the Transformer architecture a favorite for Generative AI was its reliance on self-attention, as the title of the seminal paper suggests. Self-attention was a key ingredient missing in earlier neural network architectures.

Essentially, a GPT-style Transformer predicts the next word in a sentence using this self-attention mechanism. It weighs every other word in the context, not just the immediate neighbors, to grasp context and establish relationships between them.

Through this process, the Transformer builds a comprehensive understanding of language and uses this understanding to predict subsequent words accurately. This mechanism is known as the Attention mechanism.
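How attention relates each word to the others can be sketched as scaled dot-product attention, the formulation used in the Transformer paper. The version below is a simplification: it uses the word vectors directly as queries, keys, and values, whereas a real Transformer first applies learned projections and runs several attention heads in parallel. The three toy word vectors are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this word to every word in the context.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted mix of all value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for three words.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one word attends to every word in the context; similar vectors receive larger weights.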

Regarding LLMs, they have been critically labeled as “Stochastic Parrots” (Bender, Gebru, et al., 2021) because, according to this critique, these models primarily stitch words together based on probabilistic patterns they have learned. They do not generate responses based on logical reasoning or genuine understanding of the text.

Regarding the term “pretrained” in GPT, it signifies that the model has undergone extensive training on a vast corpus of text data before being fine-tuned or adapted for specific tasks. This pre-training equips the model with knowledge of sentence structures, grammar, patterns, facts, phrases, and more. It allows the model to acquire a firm understanding of language syntax.

How Do Google and OpenAI Approach Generative AI?

Both Google and OpenAI approach Generative AI using Transformer-based models: Google with Gemini and OpenAI with ChatGPT. However, the two companies’ early Transformer models differed in key aspects. Google’s BERT utilizes a bidirectional encoder, incorporating a self-attention mechanism and a feed-forward neural network, which considers all surrounding words.

BERT aims to comprehend the context of a sentence as a whole rather than write it word by word. Its training strategy revolves around predicting missing words within a given context.

In contrast, OpenAI’s ChatGPT utilizes the Transformer architecture to predict the next word in a sequence, proceeding from left to right. It operates as a unidirectional model focused on generating coherent sentences, continuing predictions until it completes a sentence or paragraph.

Google’s Gemini, like ChatGPT, generates text one token at a time, so this architectural difference does not by itself explain any gap in response speed between the two chatbots. Both rely on the Transformer architecture as their foundational framework for providing Generative AI interfaces.
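The two approaches described in this section, considering all surrounding words versus predicting from left to right, differ in which positions each word is allowed to attend to. A minimal sketch using boolean attention masks follows; the function names are illustrative and not taken from any library.

```python
def bidirectional_mask(length):
    """Every position may attend to every other position (missing-word prediction)."""
    return [[True] * length for _ in range(length)]

def causal_mask(length):
    """Each position may attend only to itself and earlier positions (next-word prediction)."""
    return [[col <= row for col in range(length)] for row in range(length)]

def show(mask):
    """Render a mask as rows of 1s (visible) and 0s (hidden)."""
    return "\n".join(" ".join("1" if cell else "0" for cell in row) for row in mask)

print("Bidirectional:")
print(show(bidirectional_mask(4)))
print("Left to right:")
print(show(causal_mask(4)))
```

In the left-to-right mask, row *i* has ones only up to column *i*, which is what forces the model to predict each word without seeing the words that follow it.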

Applications of Generative AI

Generative AI finds extensive applications beyond text, extending into areas such as image and video generation, audio synthesis, and more. AI chatbots like ChatGPT, Gemini, and Copilot harness Generative AI capabilities.

It is also employed in autocomplete features, text summarization, virtual assistants, translation services, and other functionalities. In music generation, examples include Google’s MusicLM and Meta’s recent release of MusicGen.

Additionally, image generators from DALL-E 3 to Stable Diffusion utilize Generative AI to produce realistic images based on text descriptions. In video generation, Runway’s Gen-2 produces short clips from text prompts, while models built on Generative Adversarial Networks (GANs), such as StyleGAN 2 and BigGAN, are known for realistic image synthesis.

Moreover, Generative AI is applied in 3D model generation, with popular training datasets including ShapeNet for 3D shapes and DeepFashion for clothing images.

Furthermore, Generative AI can significantly aid in drug discovery by helping design novel drugs tailored to specific diseases. Models such as AlphaFold, developed by Google DeepMind to predict protein structures, exemplify advancements in this field. Additionally, Generative AI can be utilized for predictive modeling to forecast future events in finance and weather.

Limitations of Generative AI

Generative AI possesses significant capabilities but is not without its shortcomings. Firstly, it necessitates a substantial amount of data to train models effectively. This can pose challenges for small startups that may struggle to access high-quality data. Companies like Reddit, Stack Overflow, and Twitter have restricted access to their data or imposed high fees for access.

Recently, The Internet Archive reported an hour of website inaccessibility due to an AI startup aggressively scraping its site for training data.

In addition, Generative AI models face significant criticism for their lack of control and potential biases. Models trained on skewed internet data may disproportionately represent certain segments of the community, as evidenced by AI photo generators predominantly rendering images with lighter skin tones.

Moreover, there is a major concern regarding the use of Generative AI models for creating deepfake videos and images. As previously mentioned, these models do not comprehend the meaning or implications of their outputs and typically mimic based on the training data provided.

Despite their best efforts to align these models, companies will likely struggle to mitigate the limitations of Generative AI, which include issues such as misinformation, deepfake generation, jailbreaking, and sophisticated phishing attempts leveraging its persuasive natural language capabilities.