During I/O 2024, Google unveiled numerous new AI models, upcoming projects, and a plethora of AI features set to enhance its products. However, what particularly grabbed my attention was the Gemini 1.5 Flash model. It boasts impressive speed and efficiency, along with multimodal capabilities and a context window of up to 1 million tokens (2M via waitlist).

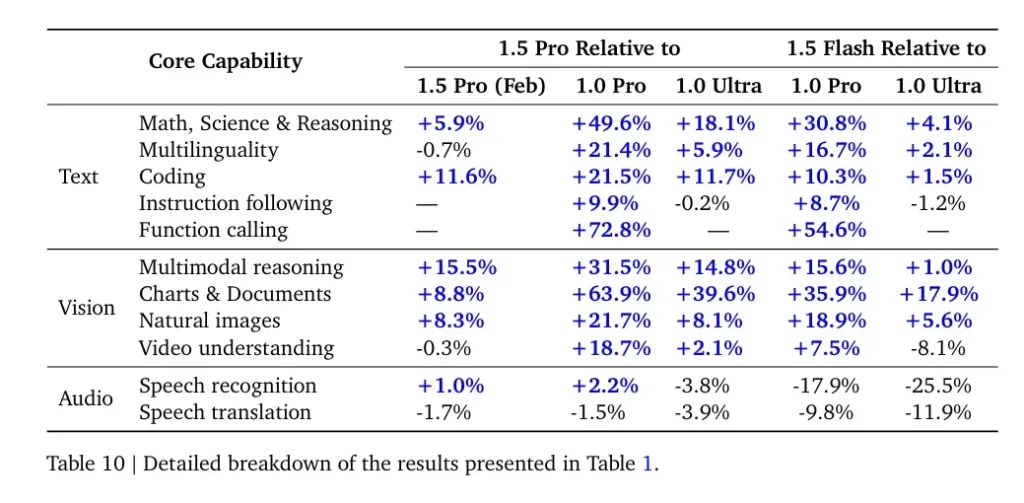

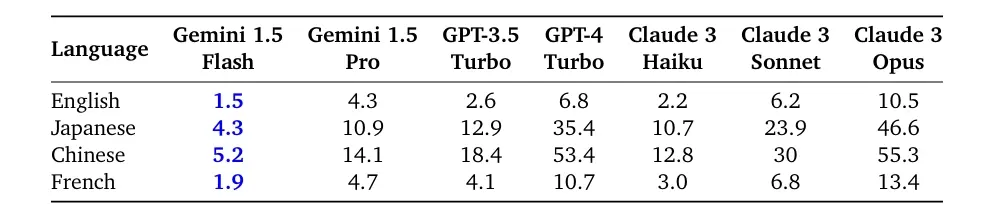

Despite its compact size (Google hasn’t disclosed its parameter count), Gemini 1.5 Flash achieves commendable scores across all modalities: text, vision, and audio. According to Google’s Gemini 1.5 technical report, it outperforms much larger models such as 1.0 Ultra and 1.0 Pro on many benchmarks. It falls slightly behind the larger models only in speech recognition and translation.

While Gemini 1.5 Pro is a sparse Mixture-of-Experts (MoE) model, Gemini 1.5 Flash is a dense model, distilled online from the larger 1.5 Pro to improve its quality. In terms of speed, Flash, which runs on Google’s custom TPUs, outpaces the other small models currently available, including Claude 3 Haiku.

And its pricing is remarkably affordable. Gemini 1.5 Flash costs $0.35 per million input tokens and $0.53 per million output tokens for prompts up to 128K tokens, rising to $0.70 and $1.05 per million tokens respectively for prompts longer than 128K. That is significantly cheaper than Llama 3 70B, Mistral Medium, GPT-3.5 Turbo, and, of course, larger models.
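To make the tiered pricing concrete, here is a minimal Python sketch that estimates the cost of a single request. It assumes the per-million-token rates quoted above (Google may have revised them since), that the tier is chosen by prompt length, and that "128K" means 128,000 tokens. `PRICING` and `estimate_cost` are illustrative names, not part of any Google SDK.

```python
# Per-1M-token USD rates for Gemini 1.5 Flash as quoted in this article.
# Assumption: the tier is selected by prompt (input) length; rates may have changed.
PRICING = {
    "short": {"input": 0.35, "output": 0.53},  # prompts up to 128K tokens
    "long": {"input": 0.70, "output": 1.05},   # prompts longer than 128K tokens
}
TIER_THRESHOLD = 128_000  # assumption: 128K interpreted as 128,000 tokens


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request under the tiered rates above."""
    tier = "short" if input_tokens <= TIER_THRESHOLD else "long"
    rates = PRICING[tier]
    # Rates are per million tokens, so scale the token counts accordingly.
    return (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000
```

For example, a 100,000-token prompt with a 2,000-token reply comes to about $0.036, while a 500,000-token prompt with an 8,000-token reply lands in the higher tier and costs about $0.36.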

For developers in need of inexpensive multimodal reasoning with a larger context window, the Flash model is an excellent option. Here’s how you can experiment with Gemini 1.5 Flash at no cost.

Using Gemini 1.5 Flash For Free

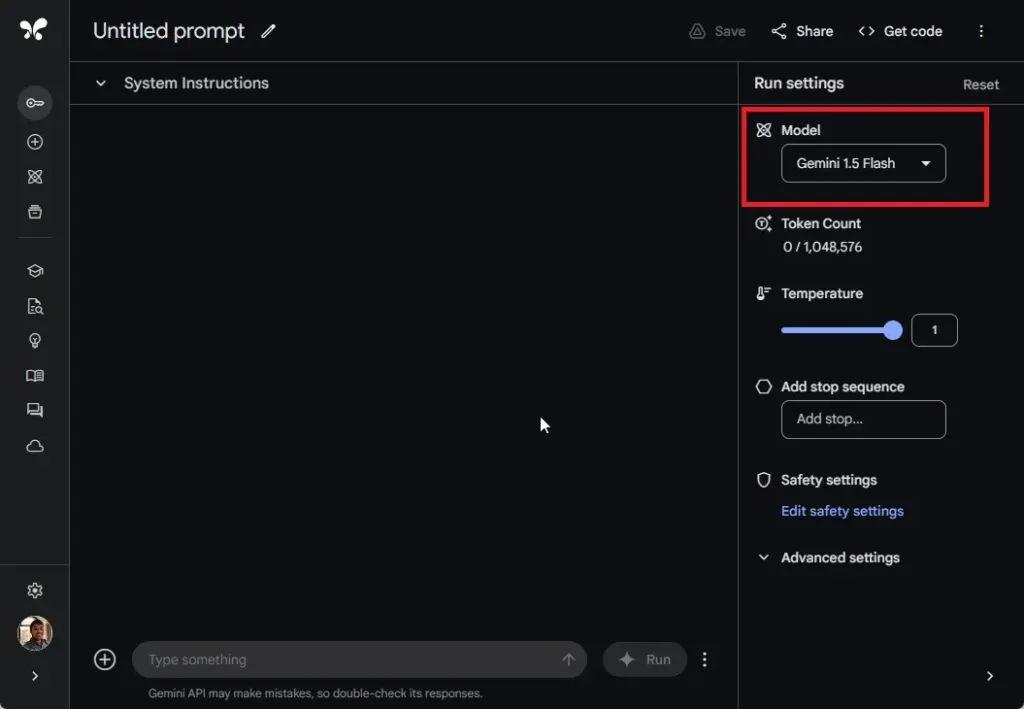

- Visit aistudio.google.com and sign in with your Google account. There’s no waitlist to access the Flash model.

- Next, select the “Gemini 1.5 Flash” model from the drop-down menu.

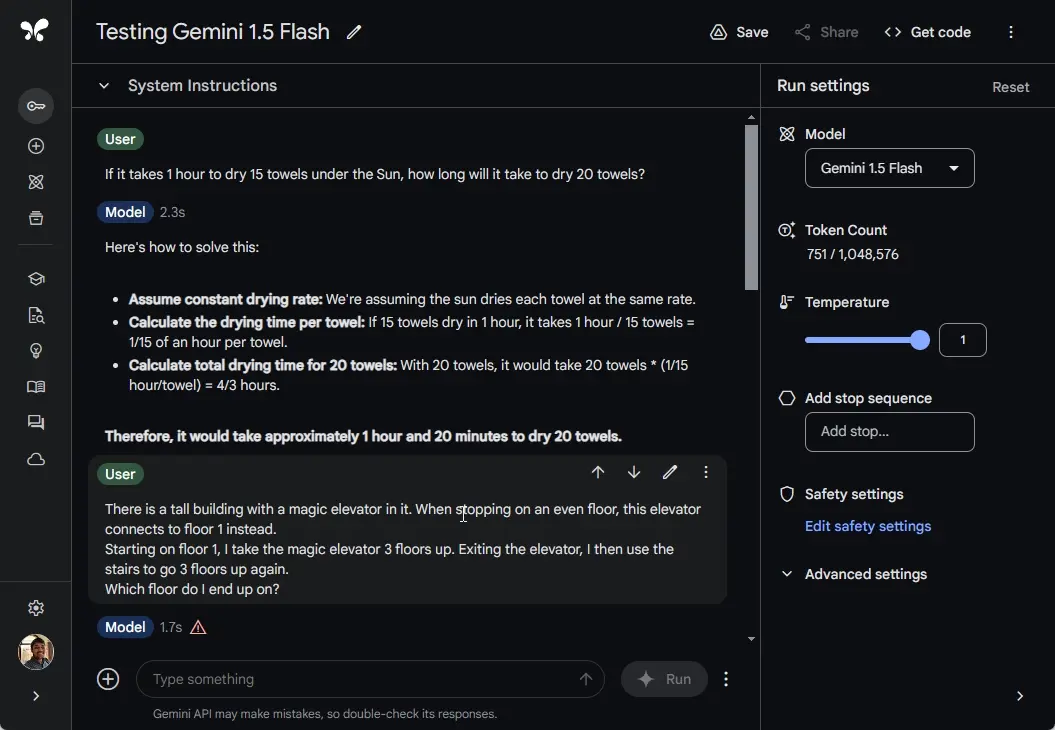

- You can now start chatting with the Flash model. You can also upload images, videos, audio clips, files, and folders.
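If you would rather call Flash from code, AI Studio also lets you create an API key. Below is a minimal, standard-library-only Python sketch of a text-only request to the Gemini API's REST `generateContent` endpoint. It assumes the `v1beta` endpoint and the model ID `gemini-1.5-flash` as named at launch (model IDs are retired over time, so check Google's current model list), and `build_request` and `ask_flash` are hypothetical helper names.

```python
import json
import urllib.request

# Assumptions: v1beta REST endpoint and the launch-era model ID; verify against current docs.
API_BASE = "https://generativelanguage.googleapis.com/v1beta/models"
MODEL_ID = "gemini-1.5-flash"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_BASE}/{MODEL_ID}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def ask_flash(prompt: str, api_key: str) -> str:
    """Send the prompt and return the text of the first candidate's first part."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        data = json.load(resp)
    return data["candidates"][0]["content"]["parts"][0]["text"]
```

With a key stored in an environment variable (the name `GEMINI_API_KEY` is only a convention here), calling `ask_flash("Summarize this article in one line.", os.environ["GEMINI_API_KEY"])` should return the model's reply as a string. Building the request separately from sending it keeps the payload easy to inspect without making a network call.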

First Impressions of Gemini 1.5 Flash

Although Gemini 1.5 Flash isn’t a state-of-the-art model, its main advantages are speed, efficiency, and affordability. It may not match Gemini 1.5 Pro or the larger models from OpenAI and Anthropic, but it is a compelling option for tasks where speed and cost matter most. I tried several reasoning prompts that I had previously used to compare GPT-4o and Gemini 1.5 Pro.

It answered only one of the five questions correctly, which suggests its commonsense reasoning may be limited. Still, for applications that need multimodal input and a large context window, it could suit your needs. Gemini models also excel at creative tasks, which is useful for developers and everyday users alike.

In summary, no other AI model currently available combines speed, efficiency, multimodality, a large context window, and near-perfect recall at such a low cost. So, what are your thoughts on Google’s latest Flash model? Share your opinions in the comments below.