YouTube, the largest hub for video sharing and creator content, has taken a significant step on AI-generated content. With millions of videos uploaded daily, the platform made a crucial decision in 2023 about how AI-generated content will be treated.

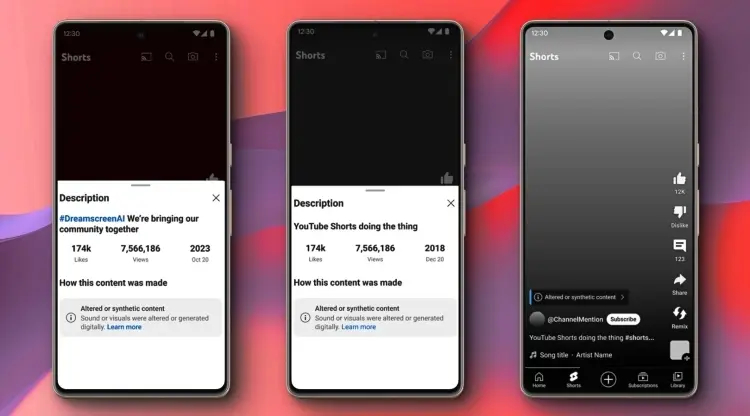

YouTube’s recent blog post outlined forthcoming changes in content disclosure rules. Creators will now be obligated to specify if their content is AI-generated. This disclosure will prompt YouTube to display a label indicating “altered or synthetic content” for videos containing AI-generated segments. Similar notifications will be implemented for content within YouTube Shorts.

The company also emphasized that in content discussing “sensitive topics” such as politics, health, and elections, this label will be displayed more prominently.

When a creator doesn’t disclose the use of AI, YouTube’s detection tools might still flag the video as AI-generated. The platform plans to use generative AI to improve content moderation and address emerging threats. In essence, YouTube is employing AI to detect AI-generated content that creators haven’t disclosed.

Why AI-Generated Content Poses a Challenge for YouTube

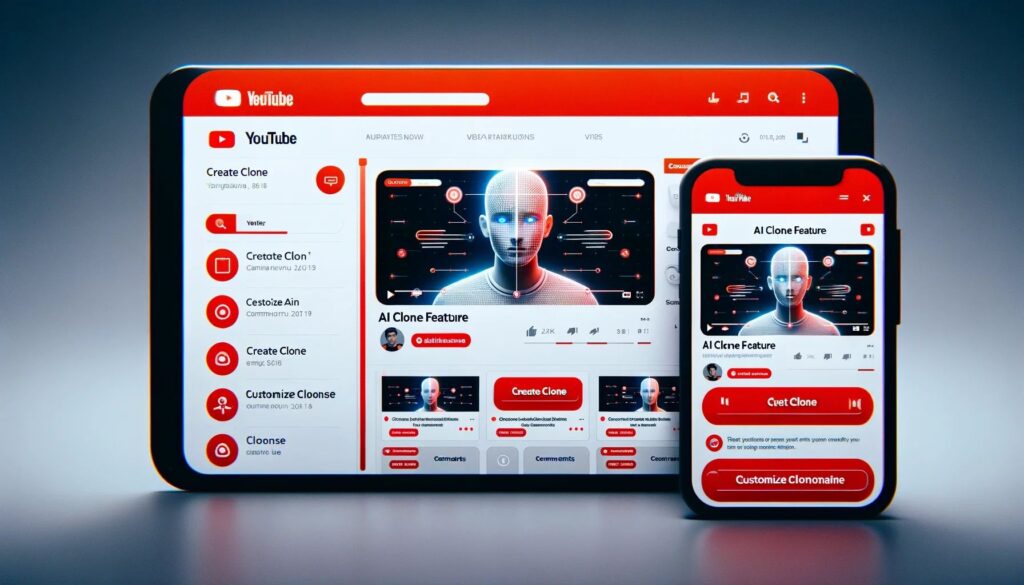

These rules will roll out gradually over the coming months. YouTube plans to inform viewers when they’re watching content, whether Shorts or long-form videos, that involves AI-generated elements. Creators will also get new tools for setting disclosures as needed.

The surge in new creators utilizing AI tools for content creation has been notable. The use of AI-generated elements in storytelling, particularly in Shorts and YouTube videos, has expanded. However, the potential misuse and misleading aspects of AI-generated content pose significant concerns and challenges within the platform.

AI-generated content indeed raises concerns for original artists and creators, potentially affecting real-life situations. YouTube emphasizes the significance of disclosure requirements and new content labels, “especially in discussions around sensitive topics like elections, ongoing conflicts, public health crises, and public officials. These regulations aim to address the potential impact of AI-generated content on critical real-world subjects.”

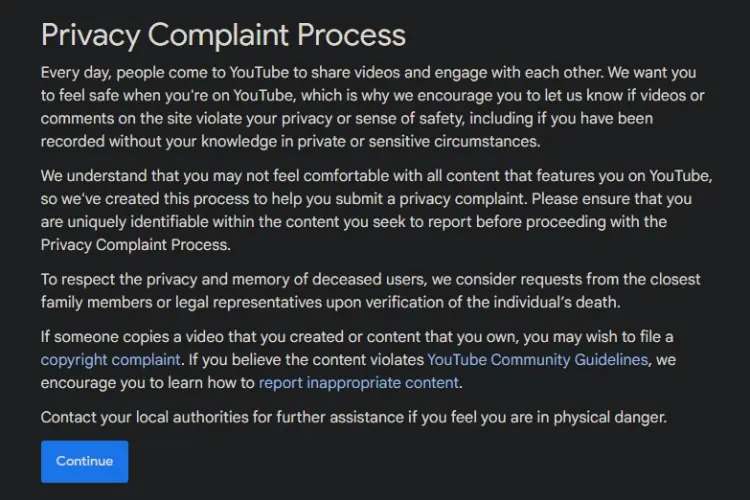

YouTube’s upcoming privacy complaint process marks a significant improvement, providing users the means to report and request the removal of content featuring their face or voice, particularly in cases where AI-generated videos are involved. Additionally, the platform will “empower music partners to request the removal of AI-generated music content that replicates an artist’s distinct singing or rapping voice.”

What do you make of YouTube’s recent policy changes aimed at addressing AI-generated content concerns? These adjustments seem to provide a way for individuals and creators to report any misuse of their face or voice in AI-generated content. Feel free to express what you think in the comments section below!
